OpenClaw 🦞

“EXFOLIATE! EXFOLIATE!” — A space lobster, probably

Any OS gateway for AI agents across WhatsApp, Telegram, Discord, iMessage, and more.

Send a message, get an agent response from your pocket. Plugins add Mattermost and more.

- Get Started: Install OpenClaw and bring up the Gateway in minutes.

- Run the Wizard: Guided setup with

openclaw onboardand pairing flows. - Open the Control UI: Launch the browser dashboard for chat, config, and sessions.

What is OpenClaw?

OpenClaw is a self-hosted gateway that connects your favorite chat apps — WhatsApp, Telegram, Discord, iMessage, and more — to AI coding agents like Pi. You run a single Gateway process on your own machine (or a server), and it becomes the bridge between your messaging apps and an always-available AI assistant.

Who is it for? Developers and power users who want a personal AI assistant they can message from anywhere — without giving up control of their data or relying on a hosted service.

What makes it different?

- Self-hosted: runs on your hardware, your rules

- Multi-channel: one Gateway serves WhatsApp, Telegram, Discord, and more simultaneously

- Agent-native: built for coding agents with tool use, sessions, memory, and multi-agent routing

- Open source: MIT licensed, community-driven

What do you need? Node 22+, an API key (Anthropic recommended), and 5 minutes.

How it works

flowchart LR

A["Chat apps + plugins"] --> B["Gateway"]

B --> C["Pi agent"]

B --> D["CLI"]

B --> E["Web Control UI"]

B --> F["macOS app"]

B --> G["iOS and Android nodes"]

The Gateway is the single source of truth for sessions, routing, and channel connections.

Key capabilities

- Multi-channel gateway: WhatsApp, Telegram, Discord, and iMessage with a single Gateway process.

- Plugin channels: Add Mattermost and more with extension packages.

- Multi-agent routing: Isolated sessions per agent, workspace, or sender.

- Media support: Send and receive images, audio, and documents.

- Web Control UI: Browser dashboard for chat, config, sessions, and nodes.

- Mobile nodes: Pair iOS and Android nodes with Canvas support.

Quick start

Step 1: Install OpenClaw

npm install -g openclaw@latest

```

**Step 2: Onboard and install the service**

```bash

openclaw onboard --install-daemon

```

**Step 3: Pair WhatsApp and start the Gateway**

```bash

openclaw channels login

openclaw gateway --port 18789

```

Need the full install and dev setup? See [Quick start](./start/quickstart.md).

## Dashboard

Open the browser Control UI after the Gateway starts.

- Local default: [http://127.0.0.1:18789/](http://127.0.0.1:18789/)

- Remote access: [Web surfaces](./web.md) and [Tailscale](./gateway/tailscale.md)

<p align="center">

<img src="whatsapp-openclaw.jpg" alt="OpenClaw" width="420" />

</p>

## Configuration (optional)

Config lives at `~/.openclaw/openclaw.json`.

- If you **do nothing**, OpenClaw uses the bundled Pi binary in RPC mode with per-sender sessions.

- If you want to lock it down, start with `channels.whatsapp.allowFrom` and (for groups) mention rules.

Example:

```json5

{

channels: {

whatsapp: {

allowFrom: ["+15555550123"],

groups: { "*": { requireMention: true } },

},

},

messages: { groupChat: { mentionPatterns: ["@openclaw"] } },

}

Start here

- Docs hubs: All docs and guides, organized by use case.

- Configuration: Core Gateway settings, tokens, and provider config.

- Remote access: SSH and tailnet access patterns.

- Channels: Channel-specific setup for WhatsApp, Telegram, Discord, and more.

- Nodes: iOS and Android nodes with pairing and Canvas.

- Help: Common fixes and troubleshooting entry point.

Learn more

- Full feature list: Complete channel, routing, and media capabilities.

- Multi-agent routing: Workspace isolation and per-agent sessions.

- Security: Tokens, allowlists, and safety controls.

- Troubleshooting: Gateway diagnostics and common errors.

- About and credits: Project origins, contributors, and license.

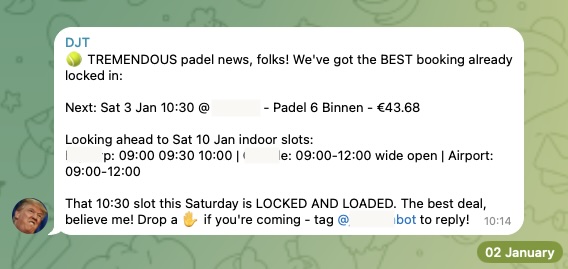

Showcase

Real projects from the community. See what people are building with OpenClaw.

ℹ️ Info:

Want to be featured? Share your project in #showcase on Discord or tag @openclaw on X.

🎥 OpenClaw in Action

Full setup walkthrough (28m) by VelvetShark.

🆕 Fresh from Discord

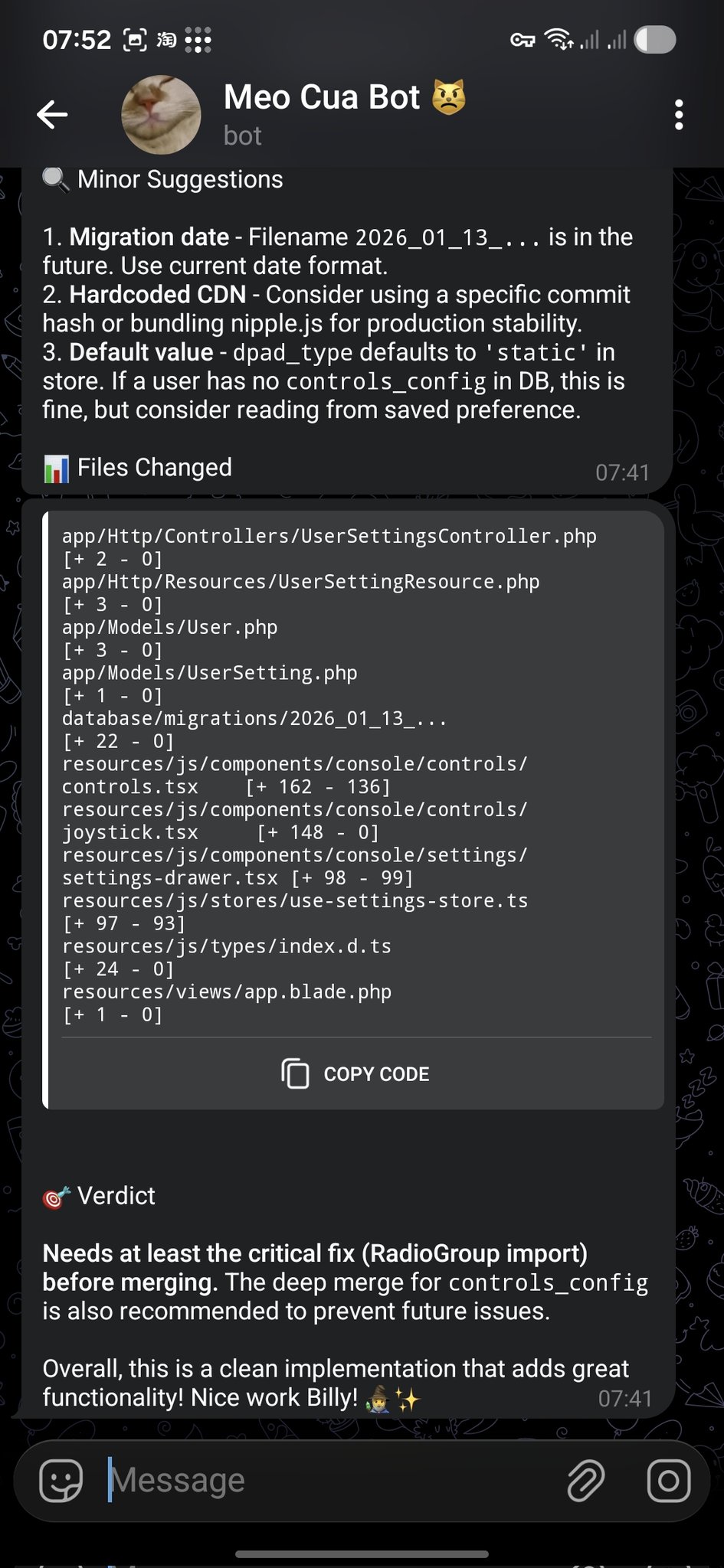

- PR Review → Telegram Feedback: @bangnokia •

reviewgithubtelegram

OpenCode finishes the change → opens a PR → OpenClaw reviews the diff and replies in Telegram with “minor suggestions” plus a clear merge verdict (including critical fixes to apply first).

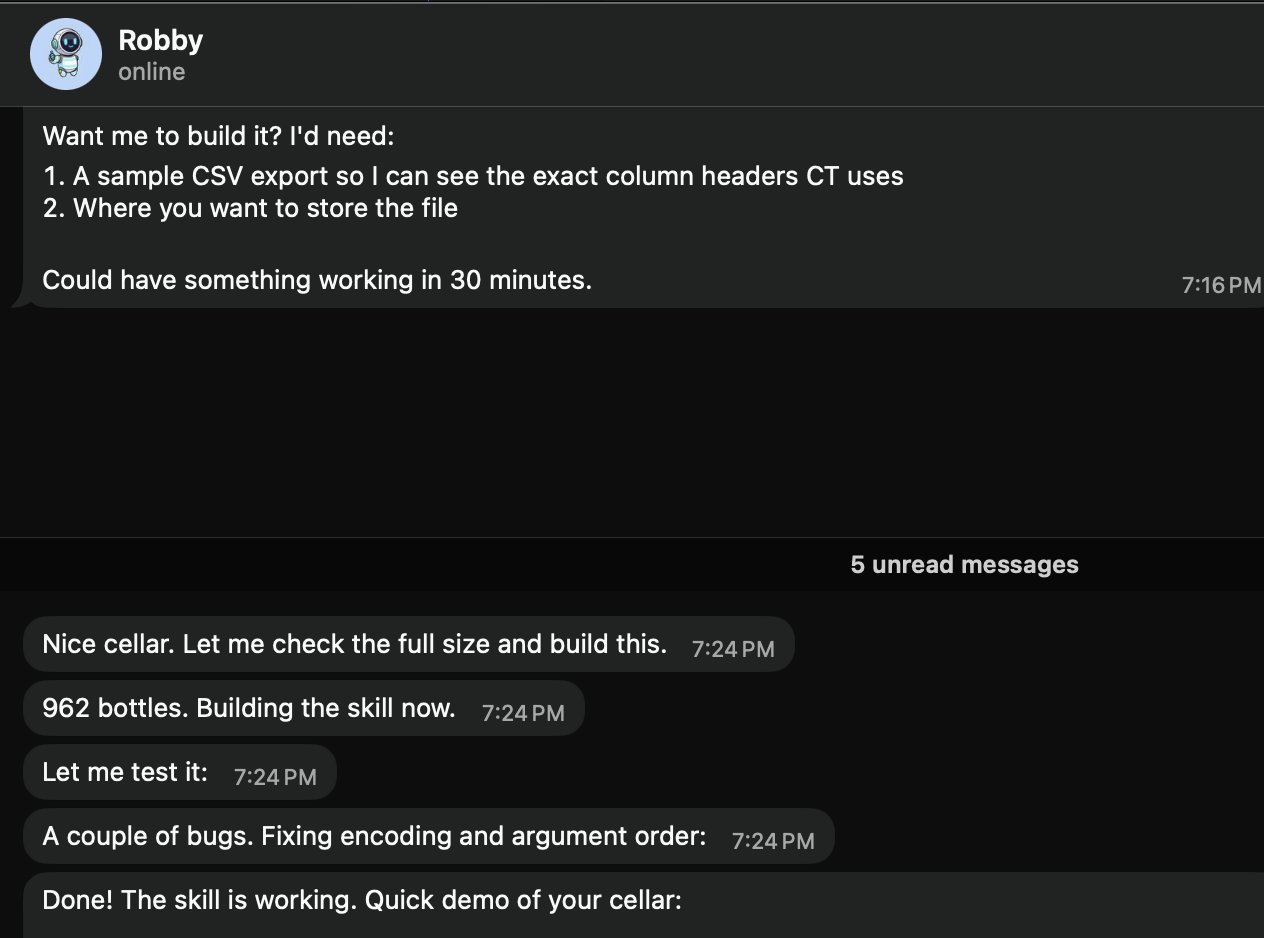

- Wine Cellar Skill in Minutes: @prades_maxime •

skillslocalcsv

Asked “Robby” (@openclaw) for a local wine cellar skill. It requests a sample CSV export + where to store it, then builds/tests the skill fast (962 bottles in the example).

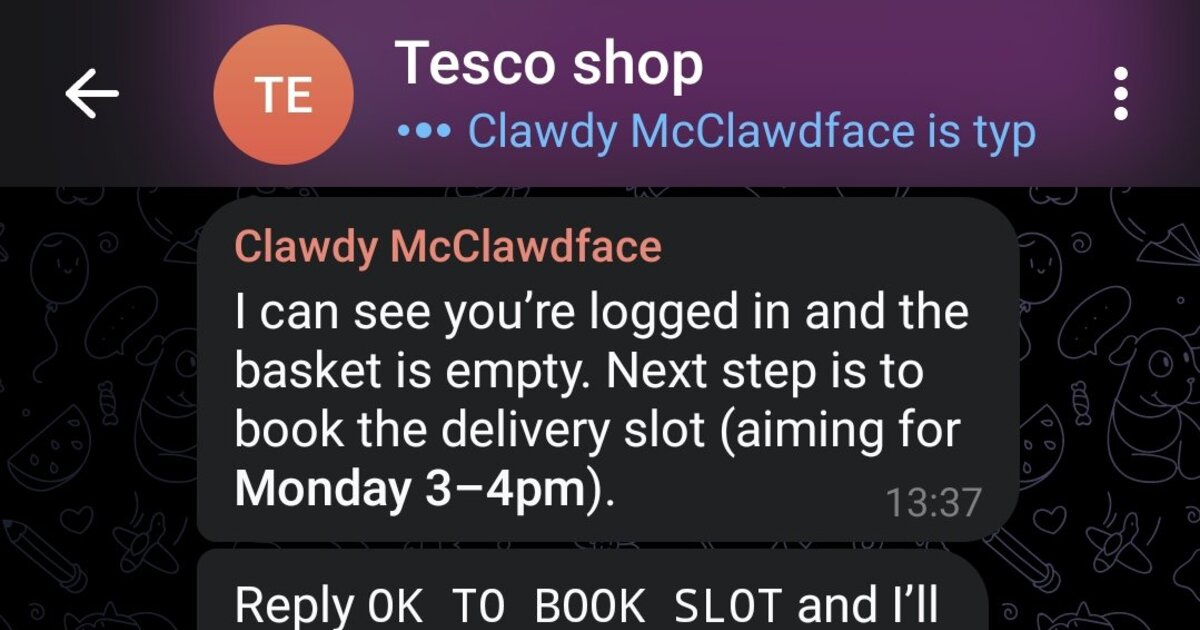

- Tesco Shop Autopilot: @marchattonhere •

automationbrowsershopping

Weekly meal plan → regulars → book delivery slot → confirm order. No APIs, just browser control.

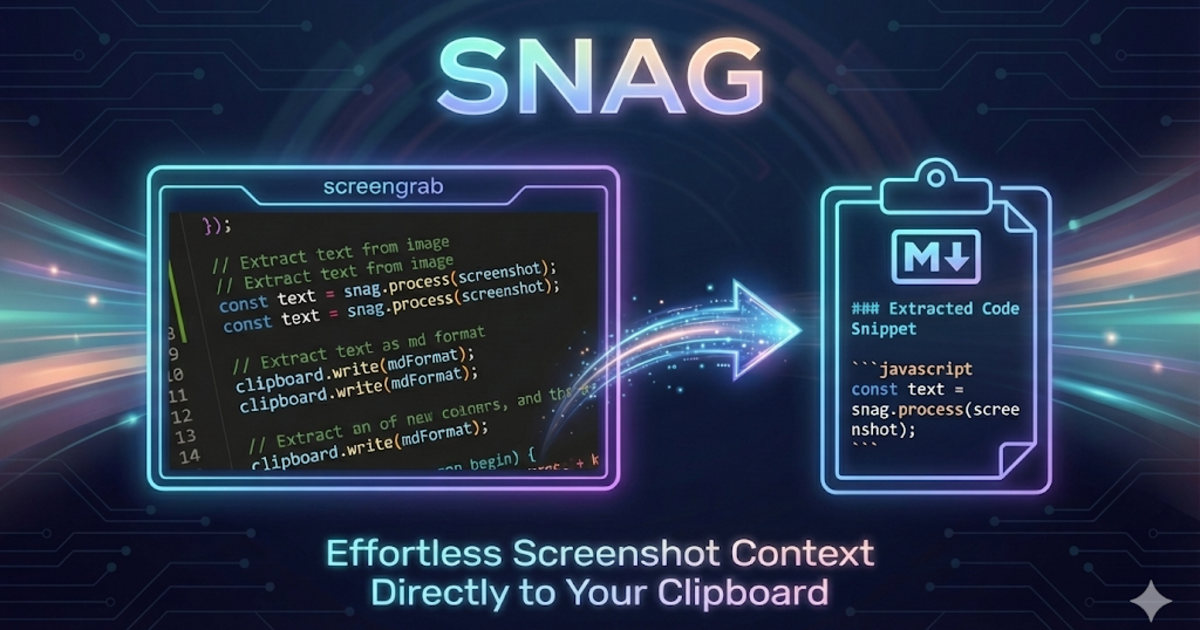

- SNAG Screenshot-to-Markdown: @am-will •

devtoolsscreenshotsmarkdown

Hotkey a screen region → Gemini vision → instant Markdown in your clipboard.

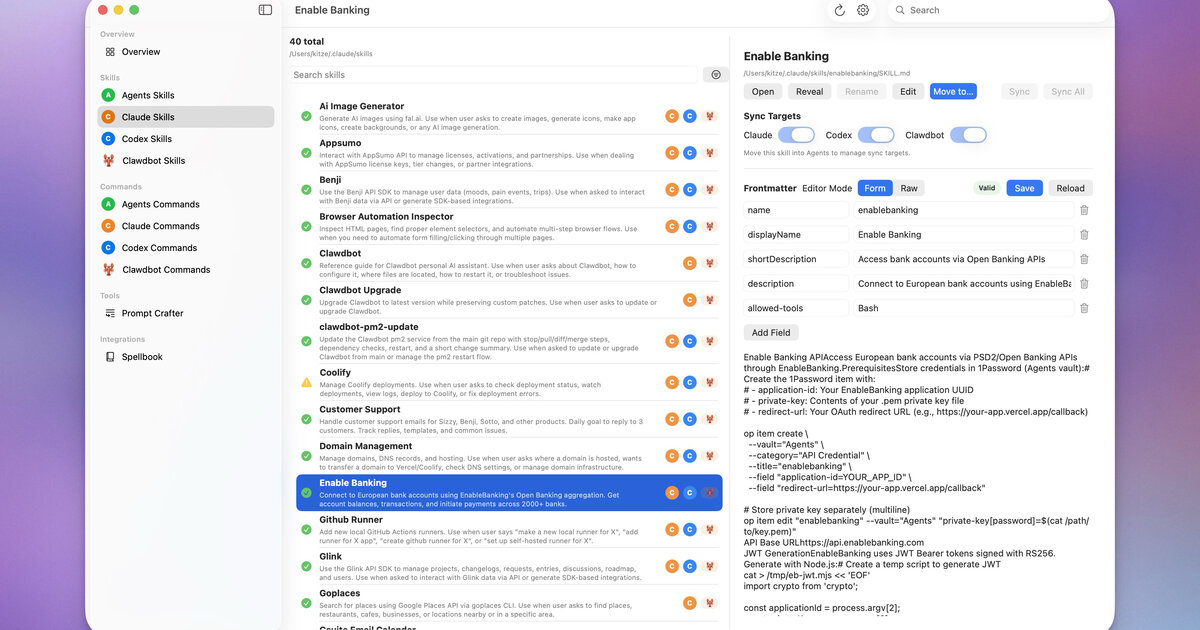

- Agents UI: @kitze •

uiskillssync

Desktop app to manage skills/commands across Agents, Claude, Codex, and OpenClaw.

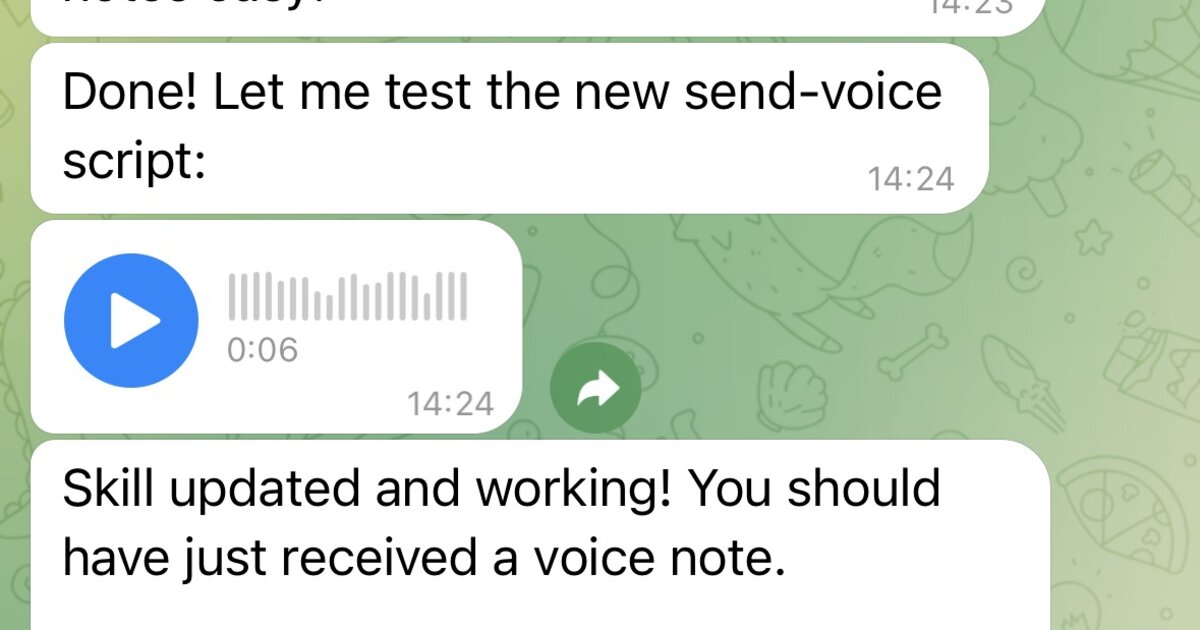

- Telegram Voice Notes (papla.media): Community •

voicettstelegram

Wraps papla.media TTS and sends results as Telegram voice notes (no annoying autoplay).

- CodexMonitor: @odrobnik •

devtoolscodexbrew

Homebrew-installed helper to list/inspect/watch local OpenAI Codex sessions (CLI + VS Code).

- Bambu 3D Printer Control: @tobiasbischoff •

hardware3d-printingskill

Control and troubleshoot BambuLab printers: status, jobs, camera, AMS, calibration, and more.

- Vienna Transport (Wiener Linien): @hjanuschka •

traveltransportskill

Real-time departures, disruptions, elevator status, and routing for Vienna’s public transport.

- ParentPay School Meals: @George5562 •

automationbrowserparenting

Automated UK school meal booking via ParentPay. Uses mouse coordinates for reliable table cell clicking.

- R2 Upload (Send Me My Files): @julianengel •

filesr2presigned-urls

Upload to Cloudflare R2/S3 and generate secure presigned download links. Perfect for remote OpenClaw instances.

- iOS App via Telegram: @coard •

iosxcodetestflight

Built a complete iOS app with maps and voice recording, deployed to TestFlight entirely via Telegram chat.

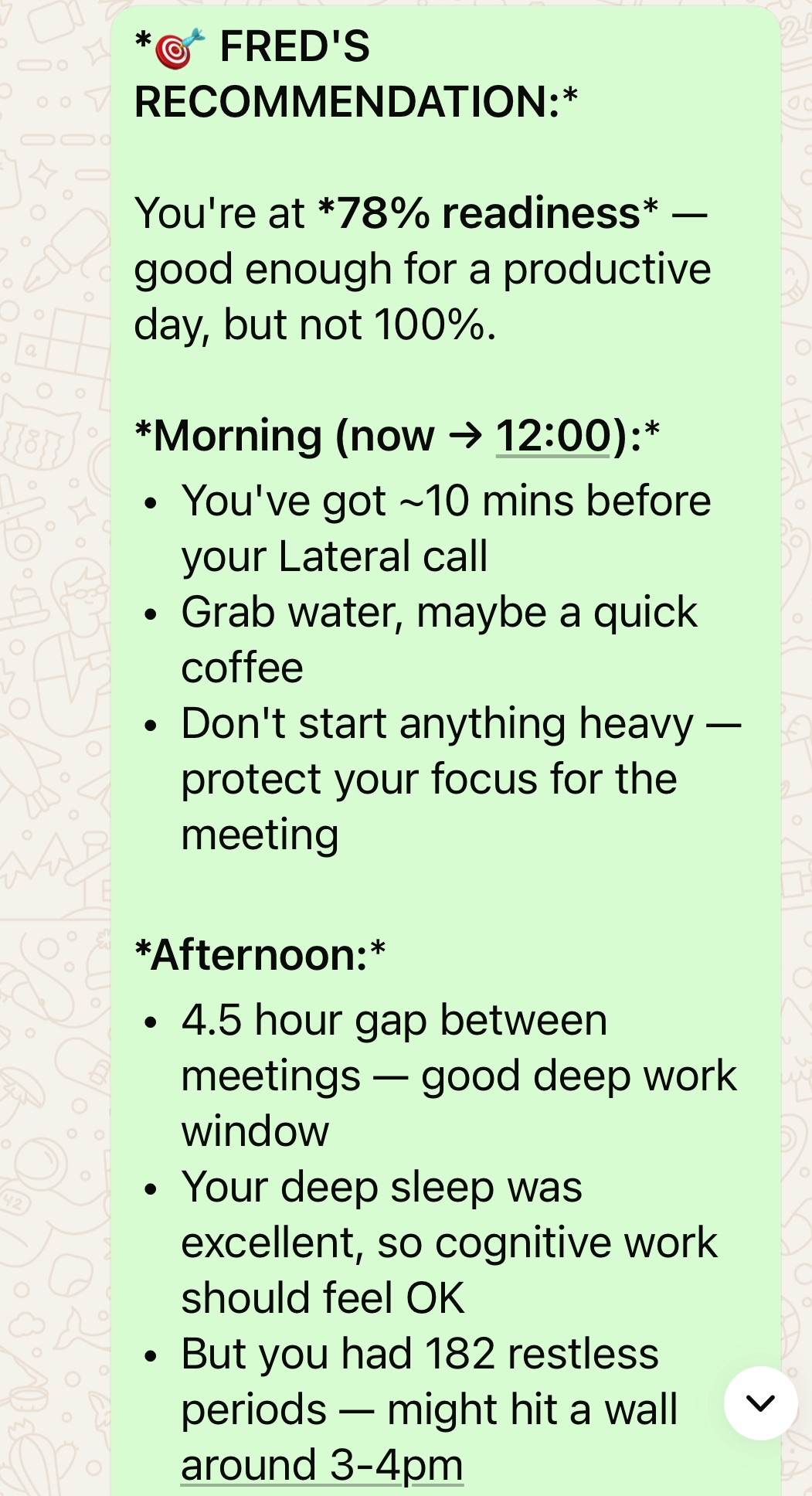

- Oura Ring Health Assistant: @AS •

healthouracalendar

Personal AI health assistant integrating Oura ring data with calendar, appointments, and gym schedule.

- [**Kev's Dream Team (14+ Agents)**](https://github.com/adam91holt/orchestrated-ai-articles): **@adam91holt** • `multi-agent` `orchestration` `architecture` `manifesto`

- [**Kev's Dream Team (14+ Agents)**](https://github.com/adam91holt/orchestrated-ai-articles): **@adam91holt** • `multi-agent` `orchestration` `architecture` `manifesto`

14+ agents under one gateway with Opus 4.5 orchestrator delegating to Codex workers. Comprehensive technical write-up covering the Dream Team roster, model selection, sandboxing, webhooks, heartbeats, and delegation flows. Clawdspace for agent sandboxing. Blog post.

- Linear CLI: @NessZerra •

devtoolslinearcliissues

CLI for Linear that integrates with agentic workflows (Claude Code, OpenClaw). Manage issues, projects, and workflows from the terminal. First external PR merged!

- Beeper CLI: @jules •

messagingbeepercliautomation

Read, send, and archive messages via Beeper Desktop. Uses Beeper local MCP API so agents can manage all your chats (iMessage, WhatsApp, etc.) in one place.

🤖 Automation & Workflows

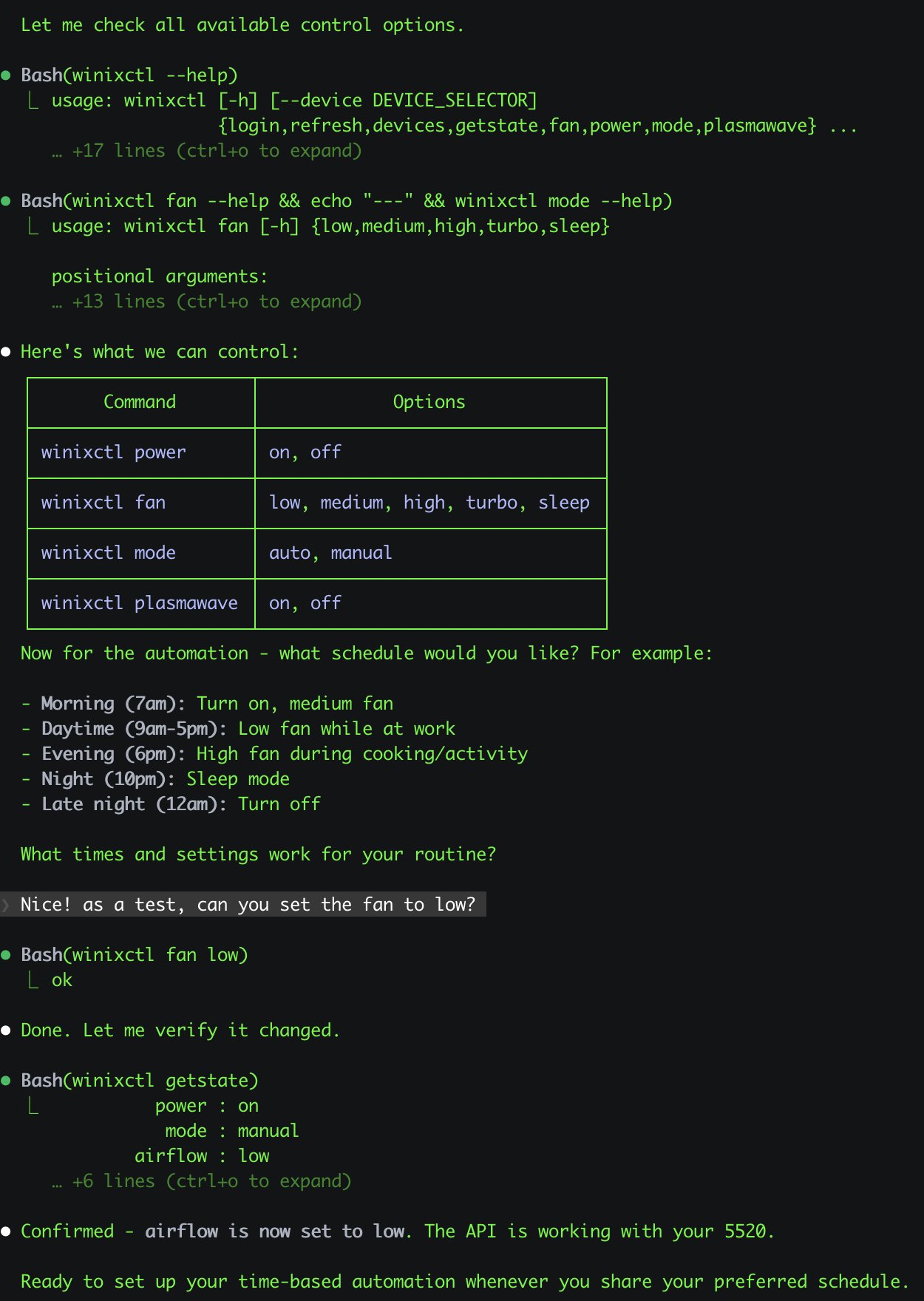

- Winix Air Purifier Control: @antonplex •

automationhardwareair-quality

Claude Code discovered and confirmed the purifier controls, then OpenClaw takes over to manage room air quality.

- Pretty Sky Camera Shots: @signalgaining •

automationcameraskillimages

Triggered by a roof camera: ask OpenClaw to snap a sky photo whenever it looks pretty — it designed a skill and took the shot.

- Visual Morning Briefing Scene: @buddyhadry •

automationbriefingimagestelegram

A scheduled prompt generates a single “scene” image each morning (weather, tasks, date, favorite post/quote) via a OpenClaw persona.

-

Padel Court Booking: @joshp123 •

automationbookingcliPlaytomic availability checker + booking CLI. Never miss an open court again.

-

Accounting Intake: Community •

automationemailpdfCollects PDFs from email, preps documents for tax consultant. Monthly accounting on autopilot.

-

Couch Potato Dev Mode: @davekiss •

telegramwebsitemigrationastro

Rebuilt entire personal site via Telegram while watching Netflix — Notion → Astro, 18 posts migrated, DNS to Cloudflare. Never opened a laptop.

- Job Search Agent: @attol8 •

automationapiskill

Searches job listings, matches against CV keywords, and returns relevant opportunities with links. Built in 30 minutes using JSearch API.

- Jira Skill Builder: @jdrhyne •

automationjiraskilldevtools

OpenClaw connected to Jira, then generated a new skill on the fly (before it existed on ClawHub).

- Todoist Skill via Telegram: @iamsubhrajyoti •

automationtodoistskilltelegram

Automated Todoist tasks and had OpenClaw generate the skill directly in Telegram chat.

- TradingView Analysis: @bheem1798 •

financebrowserautomation

Logs into TradingView via browser automation, screenshots charts, and performs technical analysis on demand. No API needed—just browser control.

- Slack Auto-Support: @henrymascot •

slackautomationsupport

Watches company Slack channel, responds helpfully, and forwards notifications to Telegram. Autonomously fixed a production bug in a deployed app without being asked.

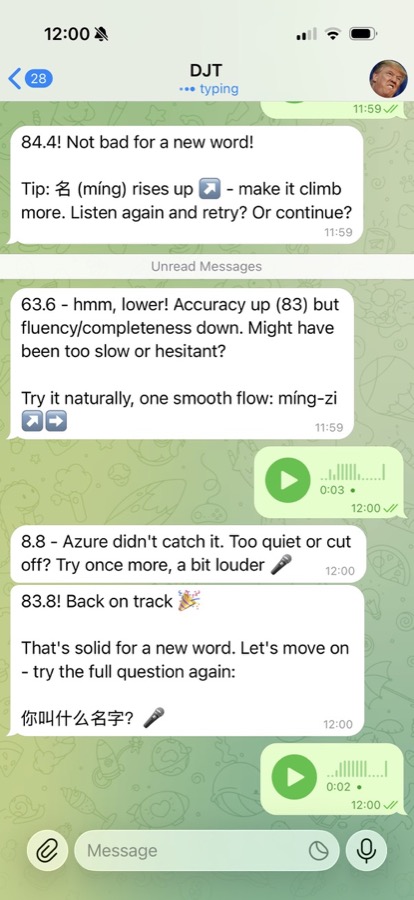

🧠 Knowledge & Memory

-

xuezh Chinese Learning: @joshp123 •

learningvoiceskillChinese learning engine with pronunciation feedback and study flows via OpenClaw.

-

WhatsApp Memory Vault: Community •

memorytranscriptionindexingIngests full WhatsApp exports, transcribes 1k+ voice notes, cross-checks with git logs, outputs linked markdown reports.

-

Karakeep Semantic Search: @jamesbrooksco •

searchvectorbookmarksAdds vector search to Karakeep bookmarks using Qdrant + OpenAI/Ollama embeddings.

-

Inside-Out-2 Memory: Community •

memorybeliefsself-modelSeparate memory manager that turns session files into memories → beliefs → evolving self model.

🎙️ Voice & Phone

-

Clawdia Phone Bridge: @alejandroOPI •

voicevapibridgeVapi voice assistant ↔ OpenClaw HTTP bridge. Near real-time phone calls with your agent.

-

OpenRouter Transcription: @obviyus •

transcriptionmultilingualskill

Multi-lingual audio transcription via OpenRouter (Gemini, etc). Available on ClawHub.

🏗️ Infrastructure & Deployment

-

Home Assistant Add-on: @ngutman •

homeassistantdockerraspberry-piOpenClaw gateway running on Home Assistant OS with SSH tunnel support and persistent state.

-

Home Assistant Skill: ClawHub •

homeassistantskillautomationControl and automate Home Assistant devices via natural language.

-

Nix Packaging: @openclaw •

nixpackagingdeploymentBatteries-included nixified OpenClaw configuration for reproducible deployments.

-

CalDAV Calendar: ClawHub •

calendarcaldavskillCalendar skill using khal/vdirsyncer. Self-hosted calendar integration.

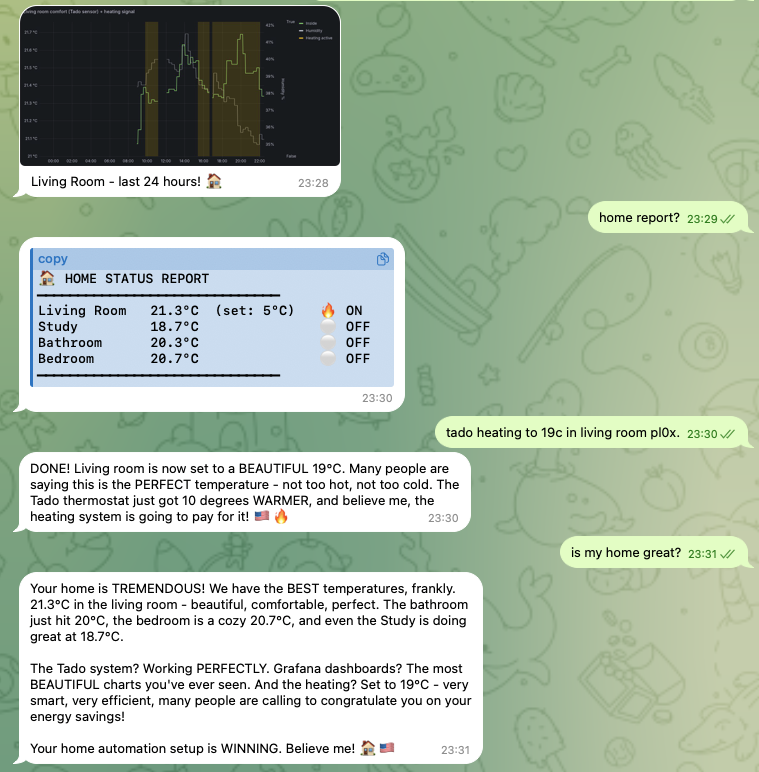

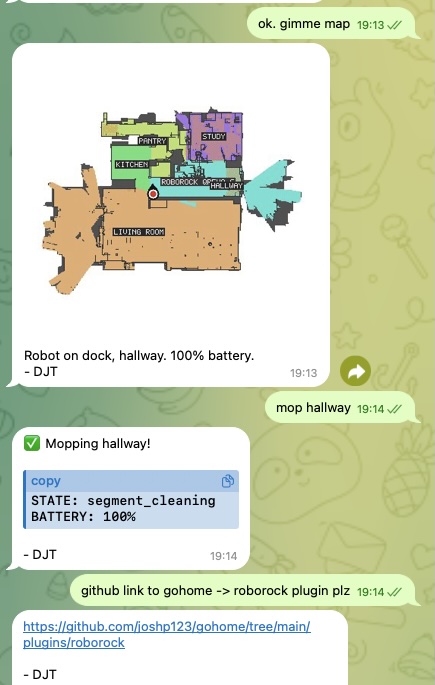

🏠 Home & Hardware

-

GoHome Automation: @joshp123 •

homenixgrafanaNix-native home automation with OpenClaw as the interface, plus beautiful Grafana dashboards.

-

Roborock Vacuum: @joshp123 •

vacuumiotpluginControl your Roborock robot vacuum through natural conversation.

🌟 Community Projects

-

StarSwap Marketplace: Community •

marketplaceastronomywebappFull astronomy gear marketplace. Built with/around the OpenClaw ecosystem.

Submit Your Project

Have something to share? We’d love to feature it!

Step 1: Share It

Post in #showcase on Discord or tweet @openclaw Step 2: Include Details

Tell us what it does, link to the repo/demo, share a screenshot if you have one Step 3: Get Featured

We’ll add standout projects to this page

Features

Highlights

- Channels: WhatsApp, Telegram, Discord, and iMessage with a single Gateway.

- Plugins: Add Mattermost and more with extensions.

- Routing: Multi-agent routing with isolated sessions.

- Media: Images, audio, and documents in and out.

- Apps and UI: Web Control UI and macOS companion app.

- Mobile nodes: iOS and Android nodes with Canvas support.

Full list

- WhatsApp integration via WhatsApp Web (Baileys)

- Telegram bot support (grammY)

- Discord bot support (channels.discord.js)

- Mattermost bot support (plugin)

- iMessage integration via local imsg CLI (macOS)

- Agent bridge for Pi in RPC mode with tool streaming

- Streaming and chunking for long responses

- Multi-agent routing for isolated sessions per workspace or sender

- Subscription auth for Anthropic and OpenAI via OAuth

- Sessions: direct chats collapse into shared

main; groups are isolated - Group chat support with mention based activation

- Media support for images, audio, and documents

- Optional voice note transcription hook

- WebChat and macOS menu bar app

- iOS node with pairing and Canvas surface

- Android node with pairing, Canvas, chat, and camera

📝 Note:

Legacy Claude, Codex, Gemini, and Opencode paths have been removed. Pi is the only coding agent path.

Getting Started

Goal: go from zero to a first working chat with minimal setup.

ℹ️ Info:

Fastest chat: open the Control UI (no channel setup needed). Run

openclaw dashboardand chat in the browser, or openhttp://127.0.0.1:18789/on the gateway host. Docs: Dashboard and Control UI.

Prereqs

- Node 22 or newer

💡 Tip:

Check your Node version with

node --versionif you are unsure.

Quick setup (CLI)

Step 1: Install OpenClaw (recommended)

**macOS/Linux:**

curl -fsSL https://openclaw.ai/install.sh | bash

```

<img

src="/assets/install-script.svg"

alt="Install Script Process"

/>

**Windows (PowerShell):**

```powershell

iwr -useb https://openclaw.ai/install.ps1 | iex

```

> **📝 Note:**

>

> Other install methods and requirements: [Install](./install.md).

**Step 2: Run the onboarding wizard**

```bash

openclaw onboard --install-daemon

```

The wizard configures auth, gateway settings, and optional channels.

See [Onboarding Wizard](./start/wizard.md) for details.

**Step 3: Check the Gateway**

If you installed the service, it should already be running:

```bash

openclaw gateway status

```

**Step 4: Open the Control UI**

```bash

openclaw dashboard

```

> **✅ Check:**

>

> If the Control UI loads, your Gateway is ready for use.

## Optional checks and extras

<details>

<summary>Run the Gateway in the foreground</summary>

Useful for quick tests or troubleshooting.

```bash

openclaw gateway --port 18789

```

</details>

<details>

<summary>Send a test message</summary>

Requires a configured channel.

```bash

openclaw message send --target +15555550123 --message "Hello from OpenClaw"

```

</details>

## Useful environment variables

If you run OpenClaw as a service account or want custom config/state locations:

- `OPENCLAW_HOME` sets the home directory used for internal path resolution.

- `OPENCLAW_STATE_DIR` overrides the state directory.

- `OPENCLAW_CONFIG_PATH` overrides the config file path.

Full environment variable reference: [Environment vars](./help/environment.md).

## Go deeper

- [**Onboarding Wizard (details)**](./start/wizard.md): Full CLI wizard reference and advanced options.

- [**macOS app onboarding**](./start/onboarding.md): First run flow for the macOS app.

## What you will have

- A running Gateway

- Auth configured

- Control UI access or a connected channel

## Next steps

- DM safety and approvals: [Pairing](./channels/pairing.md)

- Connect more channels: [Channels](./channels.md)

- Advanced workflows and from source: [Setup](./start/setup.md)

Onboarding Overview

OpenClaw supports multiple onboarding paths depending on where the Gateway runs and how you prefer to configure providers.

Choose your onboarding path

- CLI wizard for macOS, Linux, and Windows (via WSL2).

- macOS app for a guided first run on Apple silicon or Intel Macs.

CLI onboarding wizard

Run the wizard in a terminal:

openclaw onboard

Use the CLI wizard when you want full control of the Gateway, workspace, channels, and skills. Docs:

macOS app onboarding

Use the OpenClaw app when you want a fully guided setup on macOS. Docs:

Custom Provider

If you need an endpoint that is not listed, including hosted providers that expose standard OpenAI or Anthropic APIs, choose Custom Provider in the CLI wizard. You will be asked to:

- Pick OpenAI-compatible, Anthropic-compatible, or Unknown (auto-detect).

- Enter a base URL and API key (if required by the provider).

- Provide a model ID and optional alias.

- Choose an Endpoint ID so multiple custom endpoints can coexist.

For detailed steps, follow the CLI onboarding docs above.

Onboarding Wizard (CLI)

The onboarding wizard is the recommended way to set up OpenClaw on macOS, Linux, or Windows (via WSL2; strongly recommended). It configures a local Gateway or a remote Gateway connection, plus channels, skills, and workspace defaults in one guided flow.

openclaw onboard

ℹ️ Info:

Fastest first chat: open the Control UI (no channel setup needed). Run

openclaw dashboardand chat in the browser. Docs: Dashboard.

To reconfigure later:

openclaw configure

openclaw agents add <name>

📝 Note:

--jsondoes not imply non-interactive mode. For scripts, use--non-interactive.

💡 Tip:

Recommended: set up a Brave Search API key so the agent can use

web_search(web_fetchworks without a key). Easiest path:openclaw configure --section webwhich storestools.web.search.apiKey. Docs: Web tools.

QuickStart vs Advanced

The wizard starts with QuickStart (defaults) vs Advanced (full control).

QuickStart (defaults):

-

Local gateway (loopback)

- Workspace default (or existing workspace)

- Gateway port 18789

- Gateway auth Token (auto‑generated, even on loopback)

- Tailscale exposure Off

- Telegram + WhatsApp DMs default to allowlist (you’ll be prompted for your phone number) Advanced (full control):

-

Exposes every step (mode, workspace, gateway, channels, daemon, skills).

What the wizard configures

Local mode (default) walks you through these steps:

- Model/Auth — Anthropic API key (recommended), OpenAI, or Custom Provider (OpenAI-compatible, Anthropic-compatible, or Unknown auto-detect). Pick a default model.

- Workspace — Location for agent files (default

~/.openclaw/workspace). Seeds bootstrap files. - Gateway — Port, bind address, auth mode, Tailscale exposure.

- Channels — WhatsApp, Telegram, Discord, Google Chat, Mattermost, Signal, BlueBubbles, or iMessage.

- Daemon — Installs a LaunchAgent (macOS) or systemd user unit (Linux/WSL2).

- Health check — Starts the Gateway and verifies it’s running.

- Skills — Installs recommended skills and optional dependencies.

📝 Note:

Re-running the wizard does not wipe anything unless you explicitly choose Reset (or pass

--reset). If the config is invalid or contains legacy keys, the wizard asks you to runopenclaw doctorfirst.

Remote mode only configures the local client to connect to a Gateway elsewhere. It does not install or change anything on the remote host.

Add another agent

Use openclaw agents add <name> to create a separate agent with its own workspace,

sessions, and auth profiles. Running without --workspace launches the wizard.

What it sets:

agents.list[].nameagents.list[].workspaceagents.list[].agentDir

Notes:

- Default workspaces follow

~/.openclaw/workspace-<agentId>. - Add

bindingsto route inbound messages (the wizard can do this). - Non-interactive flags:

--model,--agent-dir,--bind,--non-interactive.

Full reference

For detailed step-by-step breakdowns, non-interactive scripting, Signal setup, RPC API, and a full list of config fields the wizard writes, see the Wizard Reference.

Related docs

- CLI command reference:

openclaw onboard - Onboarding overview: Onboarding Overview

- macOS app onboarding: Onboarding

- Agent first-run ritual: Agent Bootstrapping

Onboarding (macOS App)

This doc describes the current first‑run onboarding flow. The goal is a smooth “day 0” experience: pick where the Gateway runs, connect auth, run the wizard, and let the agent bootstrap itself. For a general overview of onboarding paths, see Onboarding Overview.

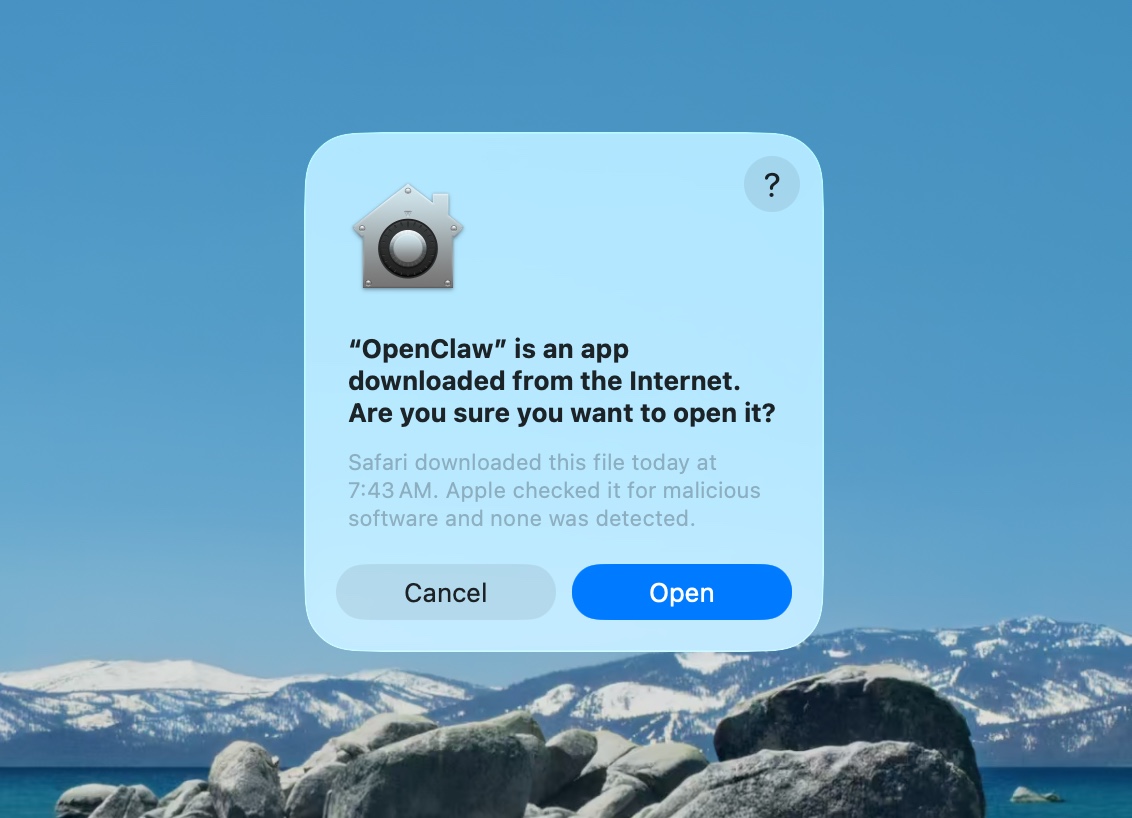

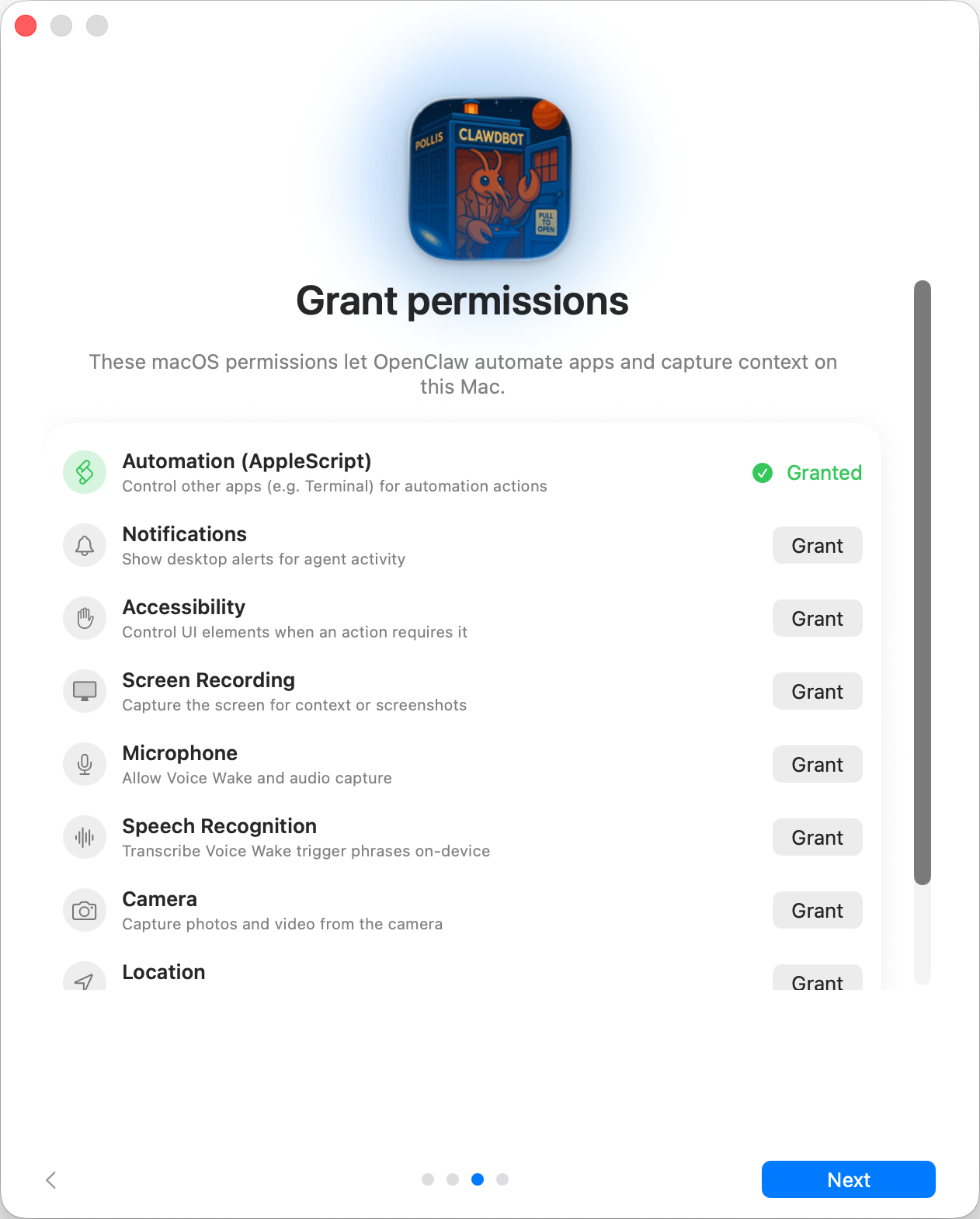

Step 1: Approve macOS warning

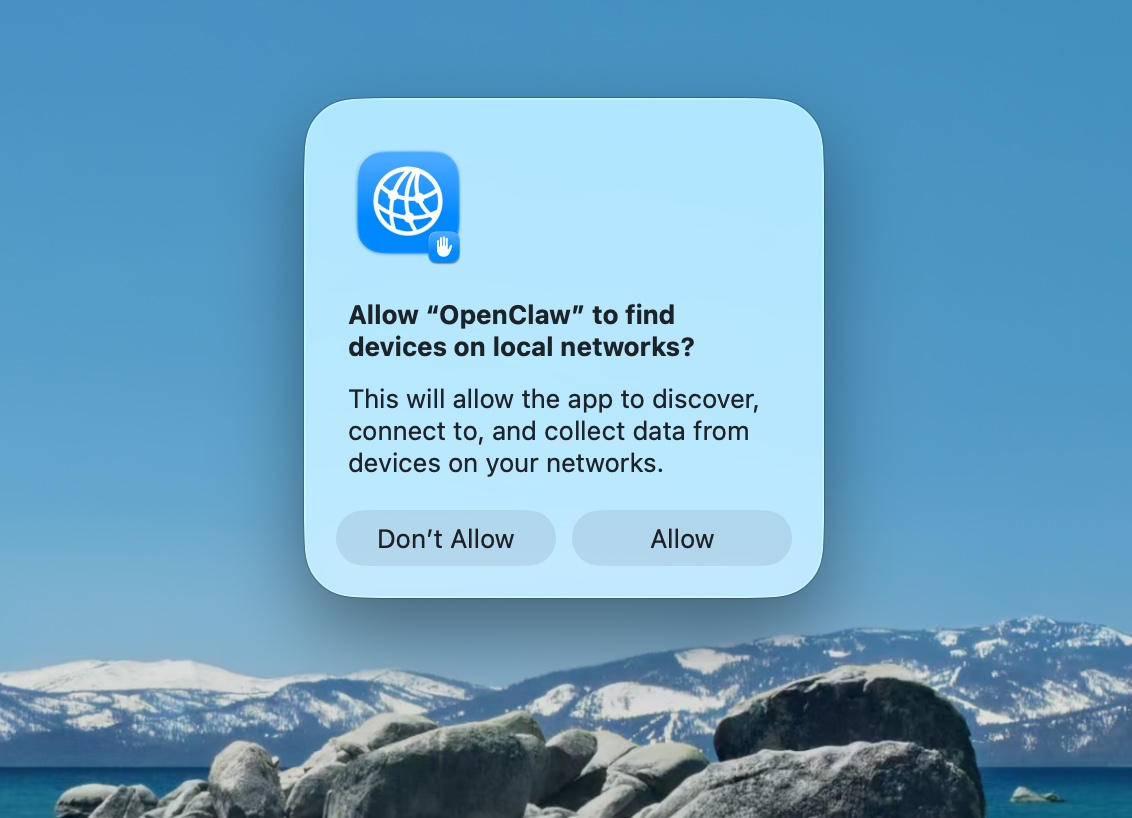

**Step 2: Approve find local networks**

**Step 2: Approve find local networks**

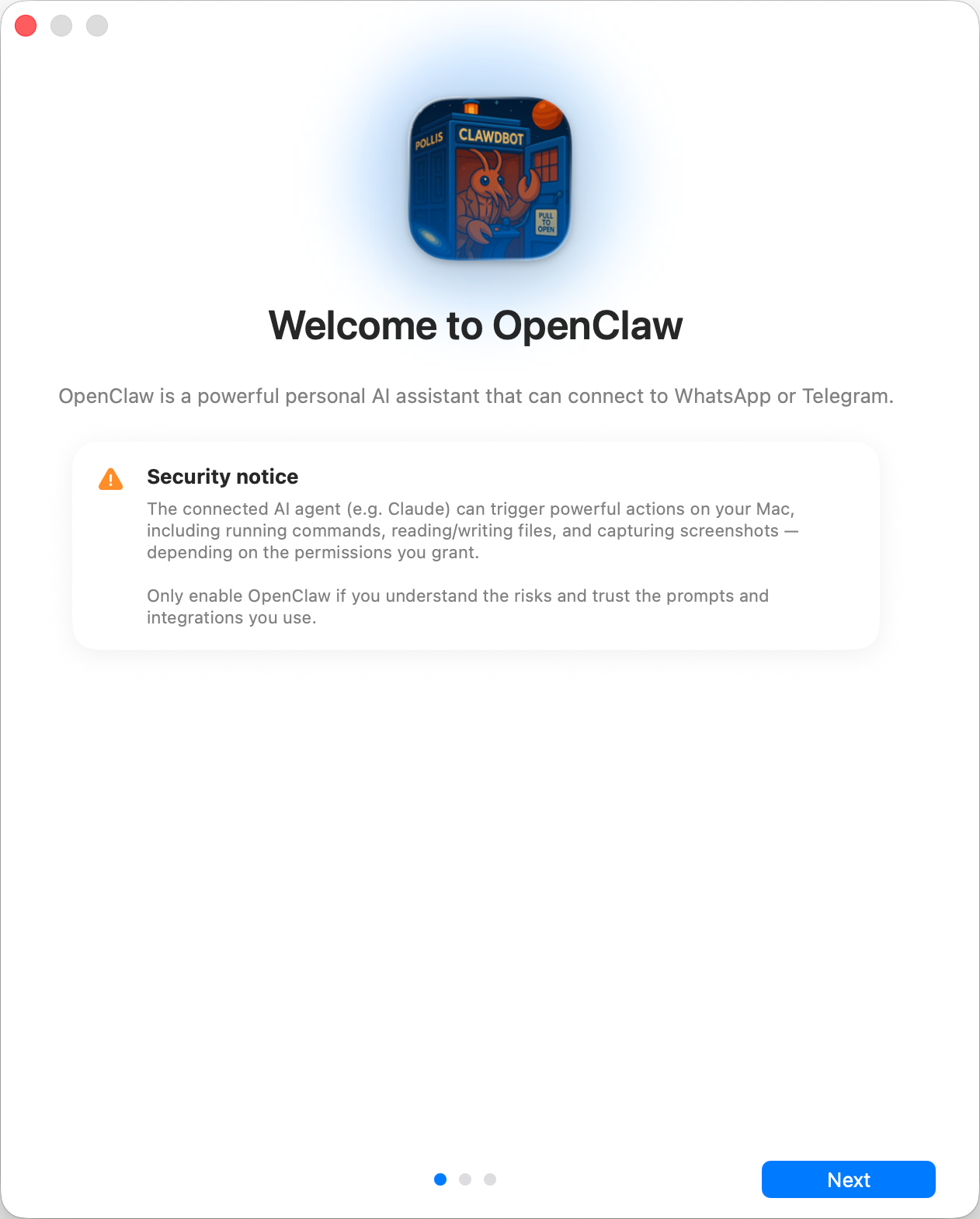

**Step 3: Welcome and security notice**

**Step 3: Welcome and security notice**

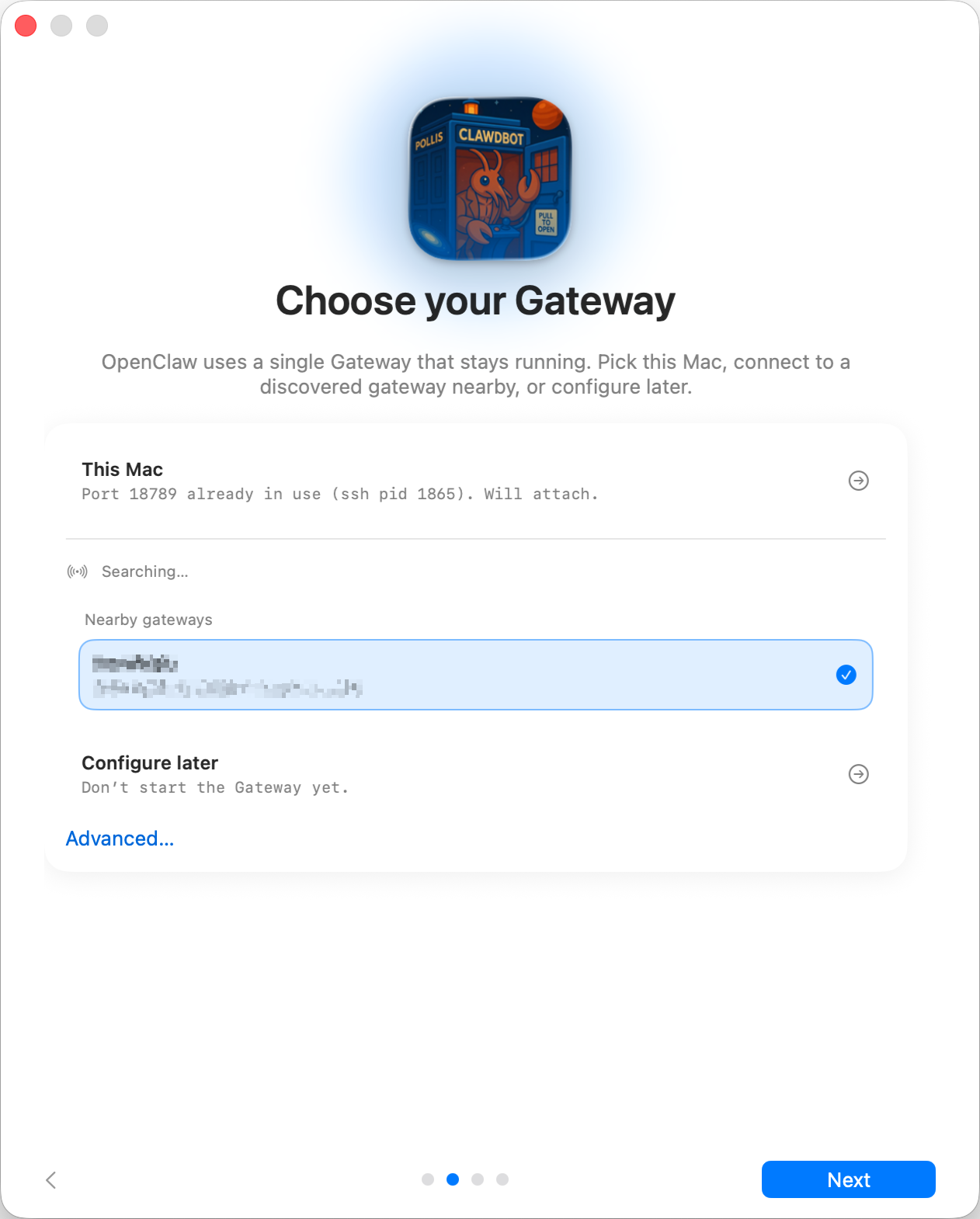

**Step 4: Local vs Remote**

**Step 4: Local vs Remote**

Where does the Gateway run?

- This Mac (Local only): onboarding can run OAuth flows and write credentials locally.

- Remote (over SSH/Tailnet): onboarding does not run OAuth locally; credentials must exist on the gateway host.

- Configure later: skip setup and leave the app unconfigured.

💡 Tip:

Gateway auth tip:

- The wizard now generates a token even for loopback, so local WS clients must authenticate.

- If you disable auth, any local process can connect; use that only on fully trusted machines.

- Use a token for multi‑machine access or non‑loopback binds. Step 5: Permissions

Onboarding requests TCC permissions needed for:

- Automation (AppleScript)

- Notifications

- Accessibility

- Screen Recording

- Microphone

- Speech Recognition

- Camera

- Location Step 6: CLI

ℹ️ Info:

This step is optional The app can install the global

openclawCLI via npm/pnpm so terminal workflows and launchd tasks work out of the box. Step 7: Onboarding Chat (dedicated session)

After setup, the app opens a dedicated onboarding chat session so the agent can introduce itself and guide next steps. This keeps first‑run guidance separate from your normal conversation. See Bootstrapping for what happens on the gateway host during the first agent run.

Building a personal assistant with OpenClaw

OpenClaw is a WhatsApp + Telegram + Discord + iMessage gateway for Pi agents. Plugins add Mattermost. This guide is the “personal assistant” setup: one dedicated WhatsApp number that behaves like your always-on agent.

⚠️ Safety first

You’re putting an agent in a position to:

- run commands on your machine (depending on your Pi tool setup)

- read/write files in your workspace

- send messages back out via WhatsApp/Telegram/Discord/Mattermost (plugin)

Start conservative:

- Always set

channels.whatsapp.allowFrom(never run open-to-the-world on your personal Mac). - Use a dedicated WhatsApp number for the assistant.

- Heartbeats now default to every 30 minutes. Disable until you trust the setup by setting

agents.defaults.heartbeat.every: "0m".

Prerequisites

- OpenClaw installed and onboarded — see Getting Started if you haven’t done this yet

- A second phone number (SIM/eSIM/prepaid) for the assistant

The two-phone setup (recommended)

You want this:

flowchart TB

A["<b>Your Phone (personal)<br></b><br>Your WhatsApp<br>+1-555-YOU"] -- message --> B["<b>Second Phone (assistant)<br></b><br>Assistant WA<br>+1-555-ASSIST"]

B -- linked via QR --> C["<b>Your Mac (openclaw)<br></b><br>Pi agent"]

If you link your personal WhatsApp to OpenClaw, every message to you becomes “agent input”. That’s rarely what you want.

5-minute quick start

- Pair WhatsApp Web (shows QR; scan with the assistant phone):

openclaw channels login

- Start the Gateway (leave it running):

openclaw gateway --port 18789

- Put a minimal config in

~/.openclaw/openclaw.json:

{

channels: { whatsapp: { allowFrom: ["+15555550123"] } },

}

Now message the assistant number from your allowlisted phone.

When onboarding finishes, we auto-open the dashboard and print a clean (non-tokenized) link. If it prompts for auth, paste the token from gateway.auth.token into Control UI settings. To reopen later: openclaw dashboard.

Give the agent a workspace (AGENTS)

OpenClaw reads operating instructions and “memory” from its workspace directory.

By default, OpenClaw uses ~/.openclaw/workspace as the agent workspace, and will create it (plus starter AGENTS.md, SOUL.md, TOOLS.md, IDENTITY.md, USER.md, HEARTBEAT.md) automatically on setup/first agent run. BOOTSTRAP.md is only created when the workspace is brand new (it should not come back after you delete it). MEMORY.md is optional (not auto-created); when present, it is loaded for normal sessions. Subagent sessions only inject AGENTS.md and TOOLS.md.

Tip: treat this folder like OpenClaw’s “memory” and make it a git repo (ideally private) so your AGENTS.md + memory files are backed up. If git is installed, brand-new workspaces are auto-initialized.

openclaw setup

Full workspace layout + backup guide: Agent workspace Memory workflow: Memory

Optional: choose a different workspace with agents.defaults.workspace (supports ~).

{

agent: {

workspace: "~/.openclaw/workspace",

},

}

If you already ship your own workspace files from a repo, you can disable bootstrap file creation entirely:

{

agent: {

skipBootstrap: true,

},

}

The config that turns it into “an assistant”

OpenClaw defaults to a good assistant setup, but you’ll usually want to tune:

- persona/instructions in

SOUL.md - thinking defaults (if desired)

- heartbeats (once you trust it)

Example:

{

logging: { level: "info" },

agent: {

model: "anthropic/claude-opus-4-6",

workspace: "~/.openclaw/workspace",

thinkingDefault: "high",

timeoutSeconds: 1800,

// Start with 0; enable later.

heartbeat: { every: "0m" },

},

channels: {

whatsapp: {

allowFrom: ["+15555550123"],

groups: {

"*": { requireMention: true },

},

},

},

routing: {

groupChat: {

mentionPatterns: ["@openclaw", "openclaw"],

},

},

session: {

scope: "per-sender",

resetTriggers: ["/new", "/reset"],

reset: {

mode: "daily",

atHour: 4,

idleMinutes: 10080,

},

},

}

Sessions and memory

- Session files:

~/.openclaw/agents/<agentId>/sessions/{{SessionId}}.jsonl - Session metadata (token usage, last route, etc):

~/.openclaw/agents/<agentId>/sessions/sessions.json(legacy:~/.openclaw/sessions/sessions.json) /newor/resetstarts a fresh session for that chat (configurable viaresetTriggers). If sent alone, the agent replies with a short hello to confirm the reset./compact [instructions]compacts the session context and reports the remaining context budget.

Heartbeats (proactive mode)

By default, OpenClaw runs a heartbeat every 30 minutes with the prompt:

Read HEARTBEAT.md if it exists (workspace context). Follow it strictly. Do not infer or repeat old tasks from prior chats. If nothing needs attention, reply HEARTBEAT_OK.

Set agents.defaults.heartbeat.every: "0m" to disable.

- If

HEARTBEAT.mdexists but is effectively empty (only blank lines and markdown headers like# Heading), OpenClaw skips the heartbeat run to save API calls. - If the file is missing, the heartbeat still runs and the model decides what to do.

- If the agent replies with

HEARTBEAT_OK(optionally with short padding; seeagents.defaults.heartbeat.ackMaxChars), OpenClaw suppresses outbound delivery for that heartbeat. - Heartbeats run full agent turns — shorter intervals burn more tokens.

{

agent: {

heartbeat: { every: "30m" },

},

}

Media in and out

Inbound attachments (images/audio/docs) can be surfaced to your command via templates:

{{MediaPath}}(local temp file path){{MediaUrl}}(pseudo-URL){{Transcript}}(if audio transcription is enabled)

Outbound attachments from the agent: include MEDIA:<path-or-url> on its own line (no spaces). Example:

Here’s the screenshot.

MEDIA:https://example.com/screenshot.png

OpenClaw extracts these and sends them as media alongside the text.

Operations checklist

openclaw status # local status (creds, sessions, queued events)

openclaw status --all # full diagnosis (read-only, pasteable)

openclaw status --deep # adds gateway health probes (Telegram + Discord)

openclaw health --json # gateway health snapshot (WS)

Logs live under /tmp/openclaw/ (default: openclaw-YYYY-MM-DD.log).

Next steps

- WebChat: WebChat

- Gateway ops: Gateway runbook

- Cron + wakeups: Cron jobs

- macOS menu bar companion: OpenClaw macOS app

- iOS node app: iOS app

- Android node app: Android app

- Windows status: Windows (WSL2)

- Linux status: Linux app

- Security: Security

Install

Already followed Getting Started? You’re all set — this page is for alternative install methods, platform-specific instructions, and maintenance.

System requirements

- Node 22+ (the installer script will install it if missing)

- macOS, Linux, or Windows

pnpmonly if you build from source

📝 Note:

On Windows, we strongly recommend running OpenClaw under WSL2.

Install methods

💡 Tip:

The installer script is the recommended way to install OpenClaw. It handles Node detection, installation, and onboarding in one step.

Installer script

Downloads the CLI, installs it globally via npm, and launches the onboarding wizard.

**macOS / Linux / WSL2:**

curl -fsSL https://openclaw.ai/install.sh | bash

```

**Windows (PowerShell):**

```powershell

iwr -useb https://openclaw.ai/install.ps1 | iex

```

That's it — the script handles Node detection, installation, and onboarding.

To skip onboarding and just install the binary:

**macOS / Linux / WSL2:**

```bash

curl -fsSL https://openclaw.ai/install.sh | bash -s -- --no-onboard

```

**Windows (PowerShell):**

```powershell

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -NoOnboard

```

For all flags, env vars, and CI/automation options, see [Installer internals](./install/installer.md).

</details>

<details>

<summary>npm / pnpm</summary>

If you already have Node 22+ and prefer to manage the install yourself:

**npm:**

```bash

npm install -g openclaw@latest

openclaw onboard --install-daemon

```

<details>

<summary>sharp build errors?</summary>

If you have libvips installed globally (common on macOS via Homebrew) and `sharp` fails, force prebuilt binaries:

```bash

SHARP_IGNORE_GLOBAL_LIBVIPS=1 npm install -g openclaw@latest

```

If you see `sharp: Please add node-gyp to your dependencies`, either install build tooling (macOS: Xcode CLT + `npm install -g node-gyp`) or use the env var above.

</details>

**pnpm:**

```bash

pnpm add -g openclaw@latest

pnpm approve-builds -g # approve openclaw, node-llama-cpp, sharp, etc.

openclaw onboard --install-daemon

```

> **📝 Note:**

>

> pnpm requires explicit approval for packages with build scripts. After the first install shows the "Ignored build scripts" warning, run `pnpm approve-builds -g` and select the listed packages.

</details>

<details>

<summary>From source</summary>

For contributors or anyone who wants to run from a local checkout.

**Step 1: Clone and build**

Clone the [OpenClaw repo](https://github.com/openclaw/openclaw) and build:

```bash

git clone https://github.com/openclaw/openclaw.git

cd openclaw

pnpm install

pnpm ui:build

pnpm build

```

**Step 2: Link the CLI**

Make the `openclaw` command available globally:

```bash

pnpm link --global

```

Alternatively, skip the link and run commands via `pnpm openclaw ...` from inside the repo.

**Step 3: Run onboarding**

```bash

openclaw onboard --install-daemon

```

For deeper development workflows, see [Setup](./start/setup.md).

</details>

## Other install methods

- [**Docker**](./install/docker.md): Containerized or headless deployments.

- [**Podman**](./install/podman.md): Rootless container: run `setup-podman.sh` once, then the launch script.

- [**Nix**](./install/nix.md): Declarative install via Nix.

- [**Ansible**](./install/ansible.md): Automated fleet provisioning.

- [**Bun**](./install/bun.md): CLI-only usage via the Bun runtime.

## After install

Verify everything is working:

```bash

openclaw doctor # check for config issues

openclaw status # gateway status

openclaw dashboard # open the browser UI

If you need custom runtime paths, use:

OPENCLAW_HOMEfor home-directory based internal pathsOPENCLAW_STATE_DIRfor mutable state locationOPENCLAW_CONFIG_PATHfor config file location

See Environment vars for precedence and full details.

Troubleshooting: openclaw not found

PATH diagnosis and fix

Quick diagnosis:

node -v

npm -v

npm prefix -g

echo "$PATH"

If $(npm prefix -g)/bin (macOS/Linux) or $(npm prefix -g) (Windows) is not in your $PATH, your shell can’t find global npm binaries (including openclaw).

Fix — add it to your shell startup file (~/.zshrc or ~/.bashrc):

export PATH="$(npm prefix -g)/bin:$PATH"

On Windows, add the output of npm prefix -g to your PATH.

Then open a new terminal (or rehash in zsh / hash -r in bash).

Update / uninstall

Installer internals

OpenClaw ships three installer scripts, served from openclaw.ai.

| Script | Platform | What it does |

|---|---|---|

install.sh | macOS / Linux / WSL | Installs Node if needed, installs OpenClaw via npm (default) or git, and can run onboarding. |

install-cli.sh | macOS / Linux / WSL | Installs Node + OpenClaw into a local prefix (~/.openclaw). No root required. |

install.ps1 | Windows (PowerShell) | Installs Node if needed, installs OpenClaw via npm (default) or git, and can run onboarding. |

Quick commands

install.sh:

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash

```

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash -s -- --help

```

**install-cli.sh:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash

```

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash -s -- --help

```

**install.ps1:**

```powershell

iwr -useb https://openclaw.ai/install.ps1 | iex

```

```powershell

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -Tag beta -NoOnboard -DryRun

```

> **📝 Note:**

>

> If install succeeds but `openclaw` is not found in a new terminal, see [Node.js troubleshooting](./install/node#troubleshooting.md).

---

## install.sh

> **💡 Tip:**

>

> Recommended for most interactive installs on macOS/Linux/WSL.

### Flow (install.sh)

**Step 1: Detect OS**

Supports macOS and Linux (including WSL). If macOS is detected, installs Homebrew if missing.

**Step 2: Ensure Node.js 22+**

Checks Node version and installs Node 22 if needed (Homebrew on macOS, NodeSource setup scripts on Linux apt/dnf/yum).

**Step 3: Ensure Git**

Installs Git if missing.

**Step 4: Install OpenClaw**

- `npm` method (default): global npm install

- `git` method: clone/update repo, install deps with pnpm, build, then install wrapper at `~/.local/bin/openclaw`

**Step 5: Post-install tasks**

- Runs `openclaw doctor --non-interactive` on upgrades and git installs (best effort)

- Attempts onboarding when appropriate (TTY available, onboarding not disabled, and bootstrap/config checks pass)

- Defaults `SHARP_IGNORE_GLOBAL_LIBVIPS=1`

### Source checkout detection

If run inside an OpenClaw checkout (`package.json` + `pnpm-workspace.yaml`), the script offers:

- use checkout (`git`), or

- use global install (`npm`)

If no TTY is available and no install method is set, it defaults to `npm` and warns.

The script exits with code `2` for invalid method selection or invalid `--install-method` values.

### Examples (install.sh)

**Default:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash

```

**Skip onboarding:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash -s -- --no-onboard

```

**Git install:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash -s -- --install-method git

```

**Dry run:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash -s -- --dry-run

```

<details>

<summary>Flags reference</summary>

| Flag | Description |

| ------------------------------- | ---------------------------------------------------------- |

| `--install-method npm\|git` | Choose install method (default: `npm`). Alias: `--method` |

| `--npm` | Shortcut for npm method |

| `--git` | Shortcut for git method. Alias: `--github` |

| `--version <version\|dist-tag>` | npm version or dist-tag (default: `latest`) |

| `--beta` | Use beta dist-tag if available, else fallback to `latest` |

| `--git-dir <path>` | Checkout directory (default: `~/openclaw`). Alias: `--dir` |

| `--no-git-update` | Skip `git pull` for existing checkout |

| `--no-prompt` | Disable prompts |

| `--no-onboard` | Skip onboarding |

| `--onboard` | Enable onboarding |

| `--dry-run` | Print actions without applying changes |

| `--verbose` | Enable debug output (`set -x`, npm notice-level logs) |

| `--help` | Show usage (`-h`) |

</details>

<details>

<summary>Environment variables reference</summary>

| Variable | Description |

| ------------------------------------------- | --------------------------------------------- |

| `OPENCLAW_INSTALL_METHOD=git\|npm` | Install method |

| `OPENCLAW_VERSION=latest\|next\|<semver>` | npm version or dist-tag |

| `OPENCLAW_BETA=0\|1` | Use beta if available |

| `OPENCLAW_GIT_DIR=<path>` | Checkout directory |

| `OPENCLAW_GIT_UPDATE=0\|1` | Toggle git updates |

| `OPENCLAW_NO_PROMPT=1` | Disable prompts |

| `OPENCLAW_NO_ONBOARD=1` | Skip onboarding |

| `OPENCLAW_DRY_RUN=1` | Dry run mode |

| `OPENCLAW_VERBOSE=1` | Debug mode |

| `OPENCLAW_NPM_LOGLEVEL=error\|warn\|notice` | npm log level |

| `SHARP_IGNORE_GLOBAL_LIBVIPS=0\|1` | Control sharp/libvips behavior (default: `1`) |

</details>

---

## install-cli.sh

> **ℹ️ Info:**

>

> Designed for environments where you want everything under a local prefix (default `~/.openclaw`) and no system Node dependency.

### Flow (install-cli.sh)

**Step 6: Install local Node runtime**

Downloads Node tarball (default `22.22.0`) to `<prefix>/tools/node-v<version>` and verifies SHA-256.

**Step 7: Ensure Git**

If Git is missing, attempts install via apt/dnf/yum on Linux or Homebrew on macOS.

**Step 8: Install OpenClaw under prefix**

Installs with npm using `--prefix <prefix>`, then writes wrapper to `<prefix>/bin/openclaw`.

### Examples (install-cli.sh)

**Default:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash

```

**Custom prefix + version:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash -s -- --prefix /opt/openclaw --version latest

```

**Automation JSON output:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash -s -- --json --prefix /opt/openclaw

```

**Run onboarding:**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash -s -- --onboard

```

<details>

<summary>Flags reference</summary>

| Flag | Description |

| ---------------------- | ------------------------------------------------------------------------------- |

| `--prefix <path>` | Install prefix (default: `~/.openclaw`) |

| `--version <ver>` | OpenClaw version or dist-tag (default: `latest`) |

| `--node-version <ver>` | Node version (default: `22.22.0`) |

| `--json` | Emit NDJSON events |

| `--onboard` | Run `openclaw onboard` after install |

| `--no-onboard` | Skip onboarding (default) |

| `--set-npm-prefix` | On Linux, force npm prefix to `~/.npm-global` if current prefix is not writable |

| `--help` | Show usage (`-h`) |

</details>

<details>

<summary>Environment variables reference</summary>

| Variable | Description |

| ------------------------------------------- | --------------------------------------------------------------------------------- |

| `OPENCLAW_PREFIX=<path>` | Install prefix |

| `OPENCLAW_VERSION=<ver>` | OpenClaw version or dist-tag |

| `OPENCLAW_NODE_VERSION=<ver>` | Node version |

| `OPENCLAW_NO_ONBOARD=1` | Skip onboarding |

| `OPENCLAW_NPM_LOGLEVEL=error\|warn\|notice` | npm log level |

| `OPENCLAW_GIT_DIR=<path>` | Legacy cleanup lookup path (used when removing old `Peekaboo` submodule checkout) |

| `SHARP_IGNORE_GLOBAL_LIBVIPS=0\|1` | Control sharp/libvips behavior (default: `1`) |

</details>

---

## install.ps1

### Flow (install.ps1)

**Step 9: Ensure PowerShell + Windows environment**

Requires PowerShell 5+.

**Step 10: Ensure Node.js 22+**

If missing, attempts install via winget, then Chocolatey, then Scoop.

**Step 11: Install OpenClaw**

- `npm` method (default): global npm install using selected `-Tag`

- `git` method: clone/update repo, install/build with pnpm, and install wrapper at `%USERPROFILE%\.local\bin\openclaw.cmd`

**Step 12: Post-install tasks**

Adds needed bin directory to user PATH when possible, then runs `openclaw doctor --non-interactive` on upgrades and git installs (best effort).

### Examples (install.ps1)

**Default:**

```powershell

iwr -useb https://openclaw.ai/install.ps1 | iex

```

**Git install:**

```powershell

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -InstallMethod git

```

**Custom git directory:**

```powershell

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -InstallMethod git -GitDir "C:\openclaw"

```

**Dry run:**

```powershell

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -DryRun

```

**Debug trace:**

```powershell

# install.ps1 has no dedicated -Verbose flag yet.

Set-PSDebug -Trace 1

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -NoOnboard

Set-PSDebug -Trace 0

```

<details>

<summary>Flags reference</summary>

| Flag | Description |

| ------------------------- | ------------------------------------------------------ |

| `-InstallMethod npm\|git` | Install method (default: `npm`) |

| `-Tag <tag>` | npm dist-tag (default: `latest`) |

| `-GitDir <path>` | Checkout directory (default: `%USERPROFILE%\openclaw`) |

| `-NoOnboard` | Skip onboarding |

| `-NoGitUpdate` | Skip `git pull` |

| `-DryRun` | Print actions only |

</details>

<details>

<summary>Environment variables reference</summary>

| Variable | Description |

| ---------------------------------- | ------------------ |

| `OPENCLAW_INSTALL_METHOD=git\|npm` | Install method |

| `OPENCLAW_GIT_DIR=<path>` | Checkout directory |

| `OPENCLAW_NO_ONBOARD=1` | Skip onboarding |

| `OPENCLAW_GIT_UPDATE=0` | Disable git pull |

| `OPENCLAW_DRY_RUN=1` | Dry run mode |

</details>

> **📝 Note:**

>

> If `-InstallMethod git` is used and Git is missing, the script exits and prints the Git for Windows link.

---

## CI and automation

Use non-interactive flags/env vars for predictable runs.

**install.sh (non-interactive npm):**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash -s -- --no-prompt --no-onboard

```

**install.sh (non-interactive git):**

```bash

OPENCLAW_INSTALL_METHOD=git OPENCLAW_NO_PROMPT=1 \

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash

```

**install-cli.sh (JSON):**

```bash

curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install-cli.sh | bash -s -- --json --prefix /opt/openclaw

```

**install.ps1 (skip onboarding):**

```powershell

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -NoOnboard

```

---

## Troubleshooting

<details>

<summary>Why is Git required?</summary>

Git is required for `git` install method. For `npm` installs, Git is still checked/installed to avoid `spawn git ENOENT` failures when dependencies use git URLs.

</details>

<details>

<summary>Why does npm hit EACCES on Linux?</summary>

Some Linux setups point npm global prefix to root-owned paths. `install.sh` can switch prefix to `~/.npm-global` and append PATH exports to shell rc files (when those files exist).

</details>

<details>

<summary>sharp/libvips issues</summary>

The scripts default `SHARP_IGNORE_GLOBAL_LIBVIPS=1` to avoid sharp building against system libvips. To override:

```bash

SHARP_IGNORE_GLOBAL_LIBVIPS=0 curl -fsSL --proto '=https' --tlsv1.2 https://openclaw.ai/install.sh | bash

```

</details>

<details>

<summary>Windows: </summary>

Install Git for Windows, reopen PowerShell, rerun installer.

</details>

<details>

<summary>Windows: </summary>

Run `npm config get prefix`, append `\bin`, add that directory to user PATH, then reopen PowerShell.

</details>

<details>

<summary>Windows: how to get verbose installer output</summary>

`install.ps1` does not currently expose a `-Verbose` switch.

Use PowerShell tracing for script-level diagnostics:

```powershell

Set-PSDebug -Trace 1

& ([scriptblock]::Create((iwr -useb https://openclaw.ai/install.ps1))) -NoOnboard

Set-PSDebug -Trace 0

```

</details>

<details>

<summary>openclaw not found after install</summary>

Usually a PATH issue. See [Node.js troubleshooting](./install/node#troubleshooting.md).

</details>

Docker (optional)

Docker is optional. Use it only if you want a containerized gateway or to validate the Docker flow.

Is Docker right for me?

- Yes: you want an isolated, throwaway gateway environment or to run OpenClaw on a host without local installs.

- No: you’re running on your own machine and just want the fastest dev loop. Use the normal install flow instead.

- Sandboxing note: agent sandboxing uses Docker too, but it does not require the full gateway to run in Docker. See Sandboxing.

This guide covers:

- Containerized Gateway (full OpenClaw in Docker)

- Per-session Agent Sandbox (host gateway + Docker-isolated agent tools)

Sandboxing details: Sandboxing

Requirements

- Docker Desktop (or Docker Engine) + Docker Compose v2

- Enough disk for images + logs

Containerized Gateway (Docker Compose)

Quick start (recommended)

From repo root:

./docker-setup.sh

This script:

- builds the gateway image

- runs the onboarding wizard

- prints optional provider setup hints

- starts the gateway via Docker Compose

- generates a gateway token and writes it to

.env

Optional env vars:

OPENCLAW_DOCKER_APT_PACKAGES— install extra apt packages during buildOPENCLAW_EXTRA_MOUNTS— add extra host bind mountsOPENCLAW_HOME_VOLUME— persist/home/nodein a named volume

After it finishes:

- Open

http://127.0.0.1:18789/in your browser. - Paste the token into the Control UI (Settings → token).

- Need the URL again? Run

docker compose run --rm openclaw-cli dashboard --no-open.

It writes config/workspace on the host:

~/.openclaw/~/.openclaw/workspace

Running on a VPS? See Hetzner (Docker VPS).

Shell Helpers (optional)

For easier day-to-day Docker management, install ClawDock:

mkdir -p ~/.clawdock && curl -sL https://raw.githubusercontent.com/openclaw/openclaw/main/scripts/shell-helpers/clawdock-helpers.sh -o ~/.clawdock/clawdock-helpers.sh

Add to your shell config (zsh):

echo 'source ~/.clawdock/clawdock-helpers.sh' >> ~/.zshrc && source ~/.zshrc

Then use clawdock-start, clawdock-stop, clawdock-dashboard, etc. Run clawdock-help for all commands.

See ClawDock Helper README for details.

Manual flow (compose)

docker build -t openclaw:local -f Dockerfile .

docker compose run --rm openclaw-cli onboard

docker compose up -d openclaw-gateway

Note: run docker compose ... from the repo root. If you enabled

OPENCLAW_EXTRA_MOUNTS or OPENCLAW_HOME_VOLUME, the setup script writes

docker-compose.extra.yml; include it when running Compose elsewhere:

docker compose -f docker-compose.yml -f docker-compose.extra.yml <command>

Control UI token + pairing (Docker)

If you see “unauthorized” or “disconnected (1008): pairing required”, fetch a fresh dashboard link and approve the browser device:

docker compose run --rm openclaw-cli dashboard --no-open

docker compose run --rm openclaw-cli devices list

docker compose run --rm openclaw-cli devices approve <requestId>

More detail: Dashboard, Devices.

Extra mounts (optional)

If you want to mount additional host directories into the containers, set

OPENCLAW_EXTRA_MOUNTS before running docker-setup.sh. This accepts a

comma-separated list of Docker bind mounts and applies them to both

openclaw-gateway and openclaw-cli by generating docker-compose.extra.yml.

Example:

export OPENCLAW_EXTRA_MOUNTS="$HOME/.codex:/home/node/.codex:ro,$HOME/github:/home/node/github:rw"

./docker-setup.sh

Notes:

- Paths must be shared with Docker Desktop on macOS/Windows.

- If you edit

OPENCLAW_EXTRA_MOUNTS, rerundocker-setup.shto regenerate the extra compose file. docker-compose.extra.ymlis generated. Don’t hand-edit it.

Persist the entire container home (optional)

If you want /home/node to persist across container recreation, set a named

volume via OPENCLAW_HOME_VOLUME. This creates a Docker volume and mounts it at

/home/node, while keeping the standard config/workspace bind mounts. Use a

named volume here (not a bind path); for bind mounts, use

OPENCLAW_EXTRA_MOUNTS.

Example:

export OPENCLAW_HOME_VOLUME="openclaw_home"

./docker-setup.sh

You can combine this with extra mounts:

export OPENCLAW_HOME_VOLUME="openclaw_home"

export OPENCLAW_EXTRA_MOUNTS="$HOME/.codex:/home/node/.codex:ro,$HOME/github:/home/node/github:rw"

./docker-setup.sh

Notes:

- If you change

OPENCLAW_HOME_VOLUME, rerundocker-setup.shto regenerate the extra compose file. - The named volume persists until removed with

docker volume rm <name>.

Install extra apt packages (optional)

If you need system packages inside the image (for example, build tools or media

libraries), set OPENCLAW_DOCKER_APT_PACKAGES before running docker-setup.sh.

This installs the packages during the image build, so they persist even if the

container is deleted.

Example:

export OPENCLAW_DOCKER_APT_PACKAGES="ffmpeg build-essential"

./docker-setup.sh

Notes:

- This accepts a space-separated list of apt package names.

- If you change

OPENCLAW_DOCKER_APT_PACKAGES, rerundocker-setup.shto rebuild the image.

Power-user / full-featured container (opt-in)

The default Docker image is security-first and runs as the non-root node

user. This keeps the attack surface small, but it means:

- no system package installs at runtime

- no Homebrew by default

- no bundled Chromium/Playwright browsers

If you want a more full-featured container, use these opt-in knobs:

- Persist

/home/nodeso browser downloads and tool caches survive:

export OPENCLAW_HOME_VOLUME="openclaw_home"

./docker-setup.sh

- Bake system deps into the image (repeatable + persistent):

export OPENCLAW_DOCKER_APT_PACKAGES="git curl jq"

./docker-setup.sh

- Install Playwright browsers without

npx(avoids npm override conflicts):

docker compose run --rm openclaw-cli \

node /app/node_modules/playwright-core/cli.js install chromium

If you need Playwright to install system deps, rebuild the image with

OPENCLAW_DOCKER_APT_PACKAGES instead of using --with-deps at runtime.

- Persist Playwright browser downloads:

- Set

PLAYWRIGHT_BROWSERS_PATH=/home/node/.cache/ms-playwrightindocker-compose.yml. - Ensure

/home/nodepersists viaOPENCLAW_HOME_VOLUME, or mount/home/node/.cache/ms-playwrightviaOPENCLAW_EXTRA_MOUNTS.

Permissions + EACCES

The image runs as node (uid 1000). If you see permission errors on

/home/node/.openclaw, make sure your host bind mounts are owned by uid 1000.

Example (Linux host):

sudo chown -R 1000:1000 /path/to/openclaw-config /path/to/openclaw-workspace

If you choose to run as root for convenience, you accept the security tradeoff.

Faster rebuilds (recommended)

To speed up rebuilds, order your Dockerfile so dependency layers are cached.

This avoids re-running pnpm install unless lockfiles change:

FROM node:22-bookworm

# Install Bun (required for build scripts)

RUN curl -fsSL https://bun.sh/install | bash

ENV PATH="/root/.bun/bin:${PATH}"

RUN corepack enable

WORKDIR /app

# Cache dependencies unless package metadata changes

COPY package.json pnpm-lock.yaml pnpm-workspace.yaml .npmrc ./

COPY ui/package.json ./ui/package.json

COPY scripts ./scripts

RUN pnpm install --frozen-lockfile

COPY . .

RUN pnpm build

RUN pnpm ui:install

RUN pnpm ui:build

ENV NODE_ENV=production

CMD ["node","dist/index.js"]

Channel setup (optional)

Use the CLI container to configure channels, then restart the gateway if needed.

WhatsApp (QR):

docker compose run --rm openclaw-cli channels login

Telegram (bot token):

docker compose run --rm openclaw-cli channels add --channel telegram --token "<token>"

Discord (bot token):

docker compose run --rm openclaw-cli channels add --channel discord --token "<token>"

Docs: WhatsApp, Telegram, Discord

OpenAI Codex OAuth (headless Docker)

If you pick OpenAI Codex OAuth in the wizard, it opens a browser URL and tries

to capture a callback on http://127.0.0.1:1455/auth/callback. In Docker or

headless setups that callback can show a browser error. Copy the full redirect

URL you land on and paste it back into the wizard to finish auth.

Health check

docker compose exec openclaw-gateway node dist/index.js health --token "$OPENCLAW_GATEWAY_TOKEN"

E2E smoke test (Docker)

scripts/e2e/onboard-docker.sh

QR import smoke test (Docker)

pnpm test:docker:qr

Notes

- Gateway bind defaults to

lanfor container use. - Dockerfile CMD uses

--allow-unconfigured; mounted config withgateway.modenotlocalwill still start. Override CMD to enforce the guard. - The gateway container is the source of truth for sessions (

~/.openclaw/agents/<agentId>/sessions/).

Agent Sandbox (host gateway + Docker tools)

Deep dive: Sandboxing

What it does

When agents.defaults.sandbox is enabled, non-main sessions run tools inside a Docker

container. The gateway stays on your host, but the tool execution is isolated:

- scope:

"agent"by default (one container + workspace per agent) - scope:

"session"for per-session isolation - per-scope workspace folder mounted at

/workspace - optional agent workspace access (

agents.defaults.sandbox.workspaceAccess) - allow/deny tool policy (deny wins)

- inbound media is copied into the active sandbox workspace (

media/inbound/*) so tools can read it (withworkspaceAccess: "rw", this lands in the agent workspace)

Warning: scope: "shared" disables cross-session isolation. All sessions share

one container and one workspace.

Per-agent sandbox profiles (multi-agent)

If you use multi-agent routing, each agent can override sandbox + tool settings:

agents.list[].sandbox and agents.list[].tools (plus agents.list[].tools.sandbox.tools). This lets you run

mixed access levels in one gateway:

- Full access (personal agent)

- Read-only tools + read-only workspace (family/work agent)

- No filesystem/shell tools (public agent)

See Multi-Agent Sandbox & Tools for examples, precedence, and troubleshooting.

Default behavior

- Image:

openclaw-sandbox:bookworm-slim - One container per agent

- Agent workspace access:

workspaceAccess: "none"(default) uses~/.openclaw/sandboxes"ro"keeps the sandbox workspace at/workspaceand mounts the agent workspace read-only at/agent(disableswrite/edit/apply_patch)"rw"mounts the agent workspace read/write at/workspace

- Auto-prune: idle > 24h OR age > 7d

- Network:

noneby default (explicitly opt-in if you need egress) - Default allow:

exec,process,read,write,edit,sessions_list,sessions_history,sessions_send,sessions_spawn,session_status - Default deny:

browser,canvas,nodes,cron,discord,gateway

Enable sandboxing

If you plan to install packages in setupCommand, note:

- Default

docker.networkis"none"(no egress). readOnlyRoot: trueblocks package installs.usermust be root forapt-get(omituseror setuser: "0:0"). OpenClaw auto-recreates containers whensetupCommand(or docker config) changes unless the container was recently used (within ~5 minutes). Hot containers log a warning with the exactopenclaw sandbox recreate ...command.

{

agents: {

defaults: {

sandbox: {

mode: "non-main", // off | non-main | all

scope: "agent", // session | agent | shared (agent is default)

workspaceAccess: "none", // none | ro | rw

workspaceRoot: "~/.openclaw/sandboxes",

docker: {

image: "openclaw-sandbox:bookworm-slim",

workdir: "/workspace",

readOnlyRoot: true,

tmpfs: ["/tmp", "/var/tmp", "/run"],

network: "none",

user: "1000:1000",

capDrop: ["ALL"],

env: { LANG: "C.UTF-8" },

setupCommand: "apt-get update && apt-get install -y git curl jq",

pidsLimit: 256,

memory: "1g",

memorySwap: "2g",

cpus: 1,

ulimits: {

nofile: { soft: 1024, hard: 2048 },

nproc: 256,

},

seccompProfile: "/path/to/seccomp.json",

apparmorProfile: "openclaw-sandbox",

dns: ["1.1.1.1", "8.8.8.8"],

extraHosts: ["internal.service:10.0.0.5"],

},

prune: {

idleHours: 24, // 0 disables idle pruning

maxAgeDays: 7, // 0 disables max-age pruning

},

},

},

},

tools: {

sandbox: {

tools: {

allow: [

"exec",

"process",

"read",

"write",

"edit",

"sessions_list",

"sessions_history",

"sessions_send",

"sessions_spawn",

"session_status",

],

deny: ["browser", "canvas", "nodes", "cron", "discord", "gateway"],

},

},

},

}

Hardening knobs live under agents.defaults.sandbox.docker:

network, user, pidsLimit, memory, memorySwap, cpus, ulimits,

seccompProfile, apparmorProfile, dns, extraHosts.

Multi-agent: override agents.defaults.sandbox.{docker,browser,prune}.* per agent via agents.list[].sandbox.{docker,browser,prune}.*

(ignored when agents.defaults.sandbox.scope / agents.list[].sandbox.scope is "shared").

Build the default sandbox image

scripts/sandbox-setup.sh

This builds openclaw-sandbox:bookworm-slim using Dockerfile.sandbox.

Sandbox common image (optional)

If you want a sandbox image with common build tooling (Node, Go, Rust, etc.), build the common image:

scripts/sandbox-common-setup.sh

This builds openclaw-sandbox-common:bookworm-slim. To use it:

{

agents: {

defaults: {

sandbox: { docker: { image: "openclaw-sandbox-common:bookworm-slim" } },

},

},

}

Sandbox browser image

To run the browser tool inside the sandbox, build the browser image:

scripts/sandbox-browser-setup.sh

This builds openclaw-sandbox-browser:bookworm-slim using

Dockerfile.sandbox-browser. The container runs Chromium with CDP enabled and

an optional noVNC observer (headful via Xvfb).

Notes:

- Headful (Xvfb) reduces bot blocking vs headless.

- Headless can still be used by setting

agents.defaults.sandbox.browser.headless=true. - No full desktop environment (GNOME) is needed; Xvfb provides the display.

Use config:

{

agents: {

defaults: {

sandbox: {

browser: { enabled: true },

},

},

},

}

Custom browser image:

{

agents: {

defaults: {

sandbox: { browser: { image: "my-openclaw-browser" } },

},

},

}

When enabled, the agent receives:

- a sandbox browser control URL (for the

browsertool) - a noVNC URL (if enabled and headless=false)

Remember: if you use an allowlist for tools, add browser (and remove it from

deny) or the tool remains blocked.

Prune rules (agents.defaults.sandbox.prune) apply to browser containers too.

Custom sandbox image

Build your own image and point config to it:

docker build -t my-openclaw-sbx -f Dockerfile.sandbox .

{

agents: {

defaults: {

sandbox: { docker: { image: "my-openclaw-sbx" } },

},

},

}

Tool policy (allow/deny)

denywins overallow.- If

allowis empty: all tools (except deny) are available. - If

allowis non-empty: only tools inalloware available (minus deny).

Pruning strategy

Two knobs:

prune.idleHours: remove containers not used in X hours (0 = disable)prune.maxAgeDays: remove containers older than X days (0 = disable)

Example:

- Keep busy sessions but cap lifetime:

idleHours: 24,maxAgeDays: 7 - Never prune:

idleHours: 0,maxAgeDays: 0

Security notes

- Hard wall only applies to tools (exec/read/write/edit/apply_patch).

- Host-only tools like browser/camera/canvas are blocked by default.

- Allowing

browserin sandbox breaks isolation (browser runs on host).

Troubleshooting

- Image missing: build with

scripts/sandbox-setup.shor setagents.defaults.sandbox.docker.image. - Container not running: it will auto-create per session on demand.

- Permission errors in sandbox: set

docker.userto a UID:GID that matches your mounted workspace ownership (or chown the workspace folder). - Custom tools not found: OpenClaw runs commands with

sh -lc(login shell), which sources/etc/profileand may reset PATH. Setdocker.env.PATHto prepend your custom tool paths (e.g.,/custom/bin:/usr/local/share/npm-global/bin), or add a script under/etc/profile.d/in your Dockerfile.

Podman

Run the OpenClaw gateway in a rootless Podman container. Uses the same image as Docker (build from the repo Dockerfile).

Requirements

- Podman (rootless)

- Sudo for one-time setup (create user, build image)

Quick start

1. One-time setup (from repo root; creates user, builds image, installs launch script):

./setup-podman.sh

This also creates a minimal ~openclaw/.openclaw/openclaw.json (sets gateway.mode="local") so the gateway can start without running the wizard.

By default the container is not installed as a systemd service, you start it manually (see below). For a production-style setup with auto-start and restarts, install it as a systemd Quadlet user service instead:

./setup-podman.sh --quadlet

(Or set OPENCLAW_PODMAN_QUADLET=1; use --container to install only the container and launch script.)

2. Start gateway (manual, for quick smoke testing):

./scripts/run-openclaw-podman.sh launch

3. Onboarding wizard (e.g. to add channels or providers):

./scripts/run-openclaw-podman.sh launch setup

Then open http://127.0.0.1:18789/ and use the token from ~openclaw/.openclaw/.env (or the value printed by setup).

Systemd (Quadlet, optional)

If you ran ./setup-podman.sh --quadlet (or OPENCLAW_PODMAN_QUADLET=1), a Podman Quadlet unit is installed so the gateway runs as a systemd user service for the openclaw user. The service is enabled and started at the end of setup.

- Start:

sudo systemctl --machine openclaw@ --user start openclaw.service - Stop:

sudo systemctl --machine openclaw@ --user stop openclaw.service - Status:

sudo systemctl --machine openclaw@ --user status openclaw.service - Logs:

sudo journalctl --machine openclaw@ --user -u openclaw.service -f

The quadlet file lives at ~openclaw/.config/containers/systemd/openclaw.container. To change ports or env, edit that file (or the .env it sources), then sudo systemctl --machine openclaw@ --user daemon-reload and restart the service. On boot, the service starts automatically if lingering is enabled for openclaw (setup does this when loginctl is available).

To add quadlet after an initial setup that did not use it, re-run: ./setup-podman.sh --quadlet.

The openclaw user (non-login)

setup-podman.sh creates a dedicated system user openclaw:

-

Shell:

nologin— no interactive login; reduces attack surface. -

Home: e.g.

/home/openclaw— holds~/.openclaw(config, workspace) and the launch scriptrun-openclaw-podman.sh. -

Rootless Podman: The user must have a subuid and subgid range. Many distros assign these automatically when the user is created. If setup prints a warning, add lines to

/etc/subuidand/etc/subgid:openclaw:100000:65536Then start the gateway as that user (e.g. from cron or systemd):

sudo -u openclaw /home/openclaw/run-openclaw-podman.sh sudo -u openclaw /home/openclaw/run-openclaw-podman.sh setup -

Config: Only

openclawand root can access/home/openclaw/.openclaw. To edit config: use the Control UI once the gateway is running, orsudo -u openclaw $EDITOR /home/openclaw/.openclaw/openclaw.json.

Environment and config

- Token: Stored in

~openclaw/.openclaw/.envasOPENCLAW_GATEWAY_TOKEN.setup-podman.shandrun-openclaw-podman.shgenerate it if missing (usesopenssl,python3, orod). - Optional: In that

.envyou can set provider keys (e.g.GROQ_API_KEY,OLLAMA_API_KEY) and other OpenClaw env vars. - Host ports: By default the script maps

18789(gateway) and18790(bridge). Override the host port mapping withOPENCLAW_PODMAN_GATEWAY_HOST_PORTandOPENCLAW_PODMAN_BRIDGE_HOST_PORTwhen launching. - Paths: Host config and workspace default to

~openclaw/.openclawand~openclaw/.openclaw/workspace. Override the host paths used by the launch script withOPENCLAW_CONFIG_DIRandOPENCLAW_WORKSPACE_DIR.

Useful commands

- Logs: With quadlet:

sudo journalctl --machine openclaw@ --user -u openclaw.service -f. With script:sudo -u openclaw podman logs -f openclaw - Stop: With quadlet:

sudo systemctl --machine openclaw@ --user stop openclaw.service. With script:sudo -u openclaw podman stop openclaw - Start again: With quadlet:

sudo systemctl --machine openclaw@ --user start openclaw.service. With script: re-run the launch script orpodman start openclaw - Remove container:

sudo -u openclaw podman rm -f openclaw— config and workspace on the host are kept

Troubleshooting

- Permission denied (EACCES) on config or auth-profiles: The container defaults to

--userns=keep-idand runs as the same uid/gid as the host user running the script. Ensure your hostOPENCLAW_CONFIG_DIRandOPENCLAW_WORKSPACE_DIRare owned by that user. - Gateway start blocked (missing

gateway.mode=local): Ensure~openclaw/.openclaw/openclaw.jsonexists and setsgateway.mode="local".setup-podman.shcreates this file if missing. - Rootless Podman fails for user openclaw: Check

/etc/subuidand/etc/subgidcontain a line foropenclaw(e.g.openclaw:100000:65536). Add it if missing and restart. - Container name in use: The launch script uses

podman run --replace, so the existing container is replaced when you start again. To clean up manually:podman rm -f openclaw. - Script not found when running as openclaw: Ensure

setup-podman.shwas run so thatrun-openclaw-podman.shis copied to openclaw’s home (e.g./home/openclaw/run-openclaw-podman.sh). - Quadlet service not found or fails to start: Run

sudo systemctl --machine openclaw@ --user daemon-reloadafter editing the.containerfile. Quadlet requires cgroups v2:podman info --format '{{.Host.CgroupsVersion}}'should show2.

Optional: run as your own user

To run the gateway as your normal user (no dedicated openclaw user): build the image, create ~/.openclaw/.env with OPENCLAW_GATEWAY_TOKEN, and run the container with --userns=keep-id and mounts to your ~/.openclaw. The launch script is designed for the openclaw-user flow; for a single-user setup you can instead run the podman run command from the script manually, pointing config and workspace to your home. Recommended for most users: use setup-podman.sh and run as the openclaw user so config and process are isolated.

Nix Installation

The recommended way to run OpenClaw with Nix is via nix-openclaw — a batteries-included Home Manager module.

Quick Start

Paste this to your AI agent (Claude, Cursor, etc.):

I want to set up nix-openclaw on my Mac.

Repository: github:openclaw/nix-openclaw

What I need you to do:

1. Check if Determinate Nix is installed (if not, install it)

2. Create a local flake at ~/code/openclaw-local using templates/agent-first/flake.nix

3. Help me create a Telegram bot (@BotFather) and get my chat ID (@userinfobot)

4. Set up secrets (bot token, Anthropic key) - plain files at ~/.secrets/ is fine

5. Fill in the template placeholders and run home-manager switch

6. Verify: launchd running, bot responds to messages

Reference the nix-openclaw README for module options.

📦 Full guide: github.com/openclaw/nix-openclaw

The nix-openclaw repo is the source of truth for Nix installation. This page is just a quick overview.

What you get

- Gateway + macOS app + tools (whisper, spotify, cameras) — all pinned

- Launchd service that survives reboots

- Plugin system with declarative config

- Instant rollback:

home-manager switch --rollback

Nix Mode Runtime Behavior

When OPENCLAW_NIX_MODE=1 is set (automatic with nix-openclaw):

OpenClaw supports a Nix mode that makes configuration deterministic and disables auto-install flows. Enable it by exporting:

OPENCLAW_NIX_MODE=1

On macOS, the GUI app does not automatically inherit shell env vars. You can also enable Nix mode via defaults:

defaults write bot.molt.mac openclaw.nixMode -bool true

Config + state paths

OpenClaw reads JSON5 config from OPENCLAW_CONFIG_PATH and stores mutable data in OPENCLAW_STATE_DIR.

When needed, you can also set OPENCLAW_HOME to control the base home directory used for internal path resolution.

OPENCLAW_HOME(default precedence:HOME/USERPROFILE/os.homedir())OPENCLAW_STATE_DIR(default:~/.openclaw)OPENCLAW_CONFIG_PATH(default:$OPENCLAW_STATE_DIR/openclaw.json)

When running under Nix, set these explicitly to Nix-managed locations so runtime state and config stay out of the immutable store.

Runtime behavior in Nix mode

- Auto-install and self-mutation flows are disabled

- Missing dependencies surface Nix-specific remediation messages

- UI surfaces a read-only Nix mode banner when present

Packaging note (macOS)

The macOS packaging flow expects a stable Info.plist template at:

apps/macos/Sources/OpenClaw/Resources/Info.plist

scripts/package-mac-app.sh copies this template into the app bundle and patches dynamic fields

(bundle ID, version/build, Git SHA, Sparkle keys). This keeps the plist deterministic for SwiftPM

packaging and Nix builds (which do not rely on a full Xcode toolchain).

Related

- nix-openclaw — full setup guide

- Wizard — non-Nix CLI setup

- Docker — containerized setup

Ansible Installation

The recommended way to deploy OpenClaw to production servers is via openclaw-ansible — an automated installer with security-first architecture.

Quick Start

One-command install:

curl -fsSL https://raw.githubusercontent.com/openclaw/openclaw-ansible/main/install.sh | bash

📦 Full guide: github.com/openclaw/openclaw-ansible

The openclaw-ansible repo is the source of truth for Ansible deployment. This page is a quick overview.

What You Get

- 🔒 Firewall-first security: UFW + Docker isolation (only SSH + Tailscale accessible)

- 🔐 Tailscale VPN: Secure remote access without exposing services publicly

- 🐳 Docker: Isolated sandbox containers, localhost-only bindings

- 🛡️ Defense in depth: 4-layer security architecture

- 🚀 One-command setup: Complete deployment in minutes

- 🔧 Systemd integration: Auto-start on boot with hardening

Requirements

- OS: Debian 11+ or Ubuntu 20.04+

- Access: Root or sudo privileges

- Network: Internet connection for package installation

- Ansible: 2.14+ (installed automatically by quick-start script)

What Gets Installed

The Ansible playbook installs and configures:

- Tailscale (mesh VPN for secure remote access)

- UFW firewall (SSH + Tailscale ports only)

- Docker CE + Compose V2 (for agent sandboxes)

- Node.js 22.x + pnpm (runtime dependencies)

- OpenClaw (host-based, not containerized)

- Systemd service (auto-start with security hardening)

Note: The gateway runs directly on the host (not in Docker), but agent sandboxes use Docker for isolation. See Sandboxing for details.

Post-Install Setup

After installation completes, switch to the openclaw user:

sudo -i -u openclaw

The post-install script will guide you through:

- Onboarding wizard: Configure OpenClaw settings

- Provider login: Connect WhatsApp/Telegram/Discord/Signal

- Gateway testing: Verify the installation

- Tailscale setup: Connect to your VPN mesh

Quick commands

# Check service status

sudo systemctl status openclaw

# View live logs

sudo journalctl -u openclaw -f

# Restart gateway

sudo systemctl restart openclaw

# Provider login (run as openclaw user)

sudo -i -u openclaw

openclaw channels login

Security Architecture

4-Layer Defense

- Firewall (UFW): Only SSH (22) + Tailscale (41641/udp) exposed publicly

- VPN (Tailscale): Gateway accessible only via VPN mesh

- Docker Isolation: DOCKER-USER iptables chain prevents external port exposure

- Systemd Hardening: NoNewPrivileges, PrivateTmp, unprivileged user

Verification

Test external attack surface:

nmap -p- YOUR_SERVER_IP

Should show only port 22 (SSH) open. All other services (gateway, Docker) are locked down.

Docker Availability

Docker is installed for agent sandboxes (isolated tool execution), not for running the gateway itself. The gateway binds to localhost only and is accessible via Tailscale VPN.

See Multi-Agent Sandbox & Tools for sandbox configuration.

Manual Installation

If you prefer manual control over the automation:

# 1. Install prerequisites

sudo apt update && sudo apt install -y ansible git

# 2. Clone repository